Secure AI for Every Fintech Workflow


Runtime AI Security Platform for Fintech AI

Protect every transaction, workflow, and model

Runtime AI Visibility

Map every AI model, agent, prompt, API call, and data flow across your fintech stack, from onboarding journeys to transaction monitoring. Understand exactly where financial data goes, which vendors see it, and which workflows touch PCI- or KYC-sensitive fields.

AI Monitoring & Governance

Continuously track AI behaviors, responses, and model drift across fraud engines, scoring pipelines, and support flows. Enforce data governance and ensure outputs align with business policy and regulatory guidelines.

AI Threat Detection

Identify prompt injection, data leakage attempts, anomalous scoring behavior, compliance violations, agent misuse, and suspicious API workflows in real time. Detect fraudsters attempting to exploit AI-based systems or probe thresholds.

AI Threat Protection

Block unsafe prompts, prevent sensitive data from exiting secure environments, restrict agent actions, and enforce policy-as-code across every AI touchpoint, including payment APIs, identity verification flows, and underwriting systems.


AI Red Teaming

Simulate adversarial prompts, fraud attacks, agent exploits, threshold probing, and data manipulation on staging environments. Validate resilience against real attacker techniques before deploying to production.


Built for Fintech Teams Who Need Trust and Speed

Safely ship AI features into payments, fraud, KYC, underwriting, and CX without building custom guardrails. Levo secures your fintech AI stack so engineering can innovate, not manage risk plumbing.

Gain complete visibility into AI across financial workflows: prompts, agents, fraud models, APIs, and third-party integrations. Enforce consistent guardrails without slowing transaction throughput or customer onboarding.

Prove that AI usage aligns with PCI-DSS, AML/KYC, privacy laws, and model governance expectations. Levo provides audit-ready logs, policy enforcement, and runtime controls so teams can deploy AI confidently.

Secure AI across every fintech workflow with Levo.

Frequently Asked Questions

Got questions? Go through the FAQs or get in touch with our team!

What is AI security for fintech?

AI security for fintech refers to the practices, tools, and policies that protect AI-powered applications in financial services from threats and misuse. It focuses on securing the machine learning models, data, and AI-driven workflows that fintech companies use. This means defending against issues like unauthorized data access, model manipulation, fraud exploitation, and compliance violations unique to AI systems. Essentially, AI security in fintech ensures that AI models (whether used for fraud detection, customer service, or trading) operate safely, reliably, and in line with regulatory requirements, just as traditional cybersecurity protects conventional IT systems.

Why is AI security important for fintech companies?

Fintech companies deal with sensitive financial data and operate under strict regulations, so the stakes are extremely high. While AI brings big upsides (faster decisions, better fraud detection, personalized services), it also expands the threat surface. An unsecured AI system can leak private customer data or make a compliance error in a millisecond. For example, an AI glitch or attack could approve fraudulent transactions or expose confidential information, leading to direct financial losses and regulatory penalties. In 2024, global banks were fined billions for compliance failures, and financial fraud costs businesses tens of billions annually. AI security is therefore vital to prevent breaches, avoid multi-million dollar fines, and preserve customer trust. In short, it lets fintechs reap AI’s benefits (speed, efficiency, insight) without triggering the catastrophic downsides of a security incident or compliance breach.

How are fintech companies using AI today?

Fintech firms are leveraging AI across a wide range of use cases. Prominent examples include: fraud detection systems that analyze transaction patterns to flag scams in real time, credit scoring algorithms that assess loan risk more accurately using alternative data, KYC/AML compliance tools that automatically verify identities and monitor for suspicious activity, chatbots and virtual assistants that handle customer service inquiries 24/7, and even algorithmic trading and robo-advisors for investment management. In practice, AI helps fintechs automate loan approvals, personalize financial advice, optimize risk models, and enhance transaction security. The business impact has been significant – for instance, AI can process documents and onboarding checks in minutes instead of weeks, catch more fraud with fewer false alarms, and enable highly personalized user experiences at scale. This widespread use of AI is reshaping fintech services to be smarter, faster, and more efficient.

What new risks does AI introduce to fintech cybersecurity?

Alongside its benefits, AI introduces several new risk dimensions for fintech: Data privacy risks arise if AI models inadvertently expose sensitive customer data (for example, an AI might “remember” and reveal a credit card number). Adversarial attack risks mean bad actors can manipulate AI inputs. Imagine prompt injection attacks on a banking chatbot, causing it to divulge private info or bypass controls. Model exploitation risks involve fraudsters using AI to probe and evade your defenses (for instance, using deepfakes to trick KYC verifications or finding transaction patterns that slip past an ML fraud detector). There are also governance and compliance risks: AI’s predictive, black-box nature can make it hard to explain decisions or ensure rules are followed. This could lead to compliance violations (e.g. an AI denying loans in a discriminatory way without explanation). In summary, AI can become a double-edged sword – if not secured, it can create new attack vectors, from intelligent fraud evasion to inadvertent data leaks, which traditional cybersecurity doesn’t cover. That’s why specialized AI security measures are essential to address these novel threats.

How can fintech firms ensure AI systems comply with regulations like PCI-DSS, KYC/AML, and data privacy laws?

Ensuring compliance for AI systems starts with embedding strong governance and controls around data and model usage. For privacy laws (GDPR, banking data regulations), fintechs need to prevent AI from exposing personal data, for example by redacting or encrypting sensitive fields so that no credit card numbers or personal identifiers appear in AI outputs (a must for PCI-DSS). All AI inputs and outputs should be logged and auditable to satisfy regulators that you know what your AI is doing with customer data. For KYC/AML, it’s important that AI-driven decisions (say, flagging a transaction or onboarding a client) are explainable and documented – regulators will ask you to show why an AI cleared a high-risk customer or how it detected money laundering patterns. Compliance teams should define policies (e.g. “AI must not use customer data beyond X purpose” or “no transactions above $Y without human review”) and enforce them through AI security tools. Regular testing and validation of AI models is also key, to ensure they aren’t inadvertently biased or breaking any rules. In short, AI security and compliance go hand in hand: by controlling what data goes into models, monitoring outcomes, and keeping detailed audit trails, fintechs can use AI while meeting obligations under PCI-DSS, AML/KYC regulations, and data protection laws. Many fintechs also align with frameworks like the NIST AI Risk Management Framework or ISO AI standards to systematically address these compliance requirements.
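As an illustration of the redaction step described above, here is a minimal Python sketch that masks card numbers in an AI response before it leaves the service. It is not Levo's implementation; the function names (`luhn_valid`, `redact_pans`) and the replacement format are assumptions made for this example, and a production control would cover many more identifier types.

```python
import re

# Candidate card numbers: 13 to 19 digits, optionally separated by spaces or hyphens.
PAN_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact_pans(text: str) -> str:
    """Mask Luhn-valid card numbers, keeping only the last four digits."""
    def mask(match: re.Match) -> str:
        digits = re.sub(r"\D", "", match.group())
        if not luhn_valid(digits):
            return match.group()  # not a plausible card number; leave untouched
        return "[REDACTED PAN ending " + digits[-4:] + "]"

    return PAN_CANDIDATE.sub(mask, text)
```

The Luhn check is there to reduce false positives: long digit strings such as order IDs usually fail the checksum and are left alone, while real card numbers are masked.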

How do we govern and secure different AI systems (LLMs, agents, RAG pipelines, APIs) in fintech?

Effective AI governance in fintech means having oversight and controls for every type of AI system in your stack. For LLM-based chatbots or advisors, you need runtime safeguards, for example filtering prompts and responses to block toxic or sensitive content and preventing the model from executing unauthorized actions.

For autonomous AI agents (AI programs that can initiate transactions or API calls), governance requires strict permissioning: sandbox their access, enforce role-based constraints, and monitor their activities continuously so an “AI employee” can’t go rogue.
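A role-based constraint of this kind can be sketched as a simple allowlist check that runs before an agent's tool call is executed. Everything named here (the roles, the tool names, the `AgentPolicy` fields, and the 500.0 limit) is hypothetical and chosen only to illustrate the idea.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class AgentPolicy:
    """Hypothetical per-role policy: permitted tools and a payment cap."""
    allowed_tools: frozenset
    max_payment_amount: float = 0.0


@dataclass
class ToolCall:
    agent_role: str
    tool: str
    amount: float = 0.0


# Illustrative policies only; real roles and limits would come from your own policy store.
POLICIES = {
    "support_bot": AgentPolicy(frozenset({"lookup_balance", "open_ticket"})),
    "payments_agent": AgentPolicy(
        frozenset({"lookup_balance", "send_payment"}), max_payment_amount=500.0
    ),
}


def authorize(call: ToolCall) -> Tuple[bool, str]:
    """Decide whether a tool call is allowed, with a reason for the audit log."""
    policy = POLICIES.get(call.agent_role)
    if policy is None:
        return False, "unknown role"
    if call.tool not in policy.allowed_tools:
        return False, "tool not permitted for role"
    if call.tool == "send_payment" and call.amount > policy.max_payment_amount:
        return False, "amount exceeds limit; route to human review"
    return True, "allowed"
```

Denying by default (unknown roles and unlisted tools are rejected) keeps a newly added agent from inheriting access it was never granted.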

Retrieval-Augmented Generation (RAG) pipelines, which combine AI with your data sources, demand extra scrutiny: you should monitor what data the AI is retrieving, control the vector database access, and validate outputs against source data to avoid misinformation.

Finally, securing AI-centric APIs involves applying traditional API security best practices (authentication, rate limiting, anomaly detection) plus AI-specific checks – for instance, detecting abnormal usage patterns that might indicate someone is querying an ML model to infer sensitive data.
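One simple form of the abnormal-usage check mentioned above is a sliding-window counter per client: a caller who queries a scoring model far more often than normal may be probing it. The sketch below assumes hypothetical names (`QueryRateMonitor`, `record`) and takes timestamps as plain numbers so it stays independent of any particular API framework.

```python
from collections import defaultdict, deque


class QueryRateMonitor:
    """Flag clients that exceed max_calls within a sliding time window."""

    def __init__(self, max_calls: int, window_seconds: float):
        self.max_calls = max_calls
        self.window_seconds = window_seconds
        self._calls = defaultdict(deque)  # client_id -> recent call timestamps

    def record(self, client_id: str, timestamp: float) -> bool:
        """Record one call; return True if the client is now over the limit."""
        calls = self._calls[client_id]
        calls.append(timestamp)
        # Drop timestamps that have fallen out of the window.
        while calls and calls[0] <= timestamp - self.window_seconds:
            calls.popleft()
        return len(calls) > self.max_calls
```

A flagged client does not have to be blocked outright; the signal could instead raise an alert or trigger step-up authentication.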

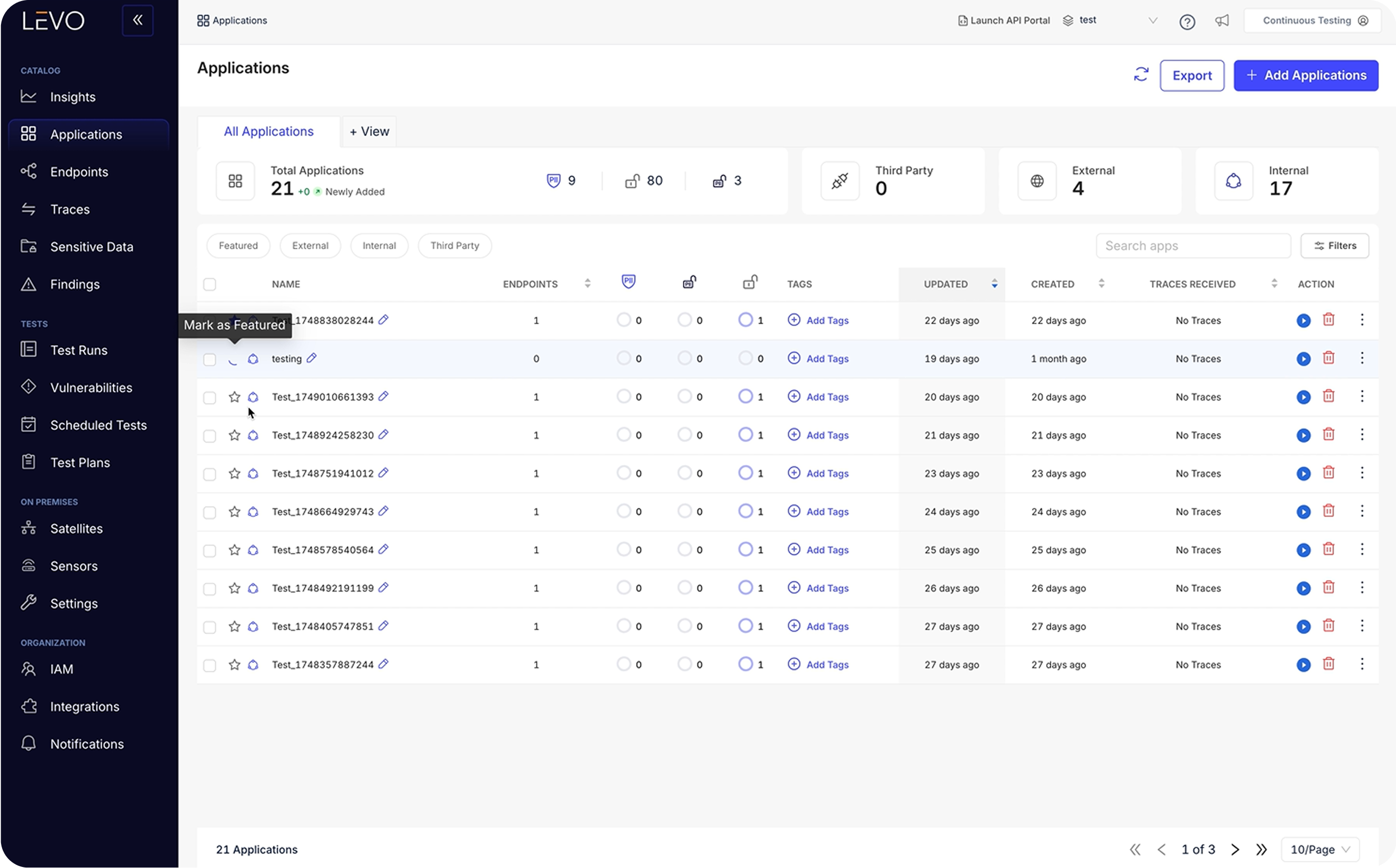

In practice, fintech leaders are establishing unified AI governance frameworks that span these components. This might include an AI security platform that inventories all AI assets (models, agents, third-party AI services), applies consistent policies (like data masking or output validation) across them, and gives a real-time dashboard of AI activities and risks.

By governing LLMs, AI agents, RAG, and APIs under one security umbrella, fintechs ensure that innovation doesn’t outrun oversight.

Can’t our existing security tools protect our AI applications?

Traditional security tools (firewalls, web app scanners, DLP, IAM, etc.) are necessary but not sufficient for AI-driven applications. They were built for static rules and known attack patterns, whereas AI models behave differently and can generate unpredictable outputs.

For example, a Web Application Firewall won’t catch a cleverly crafted prompt that causes an AI to leak data, because it doesn’t “understand” AI instructions. Likewise, standard DLP might miss sensitive info if it’s embedded in an AI-generated response.

In fact, conventional security products “don’t understand prompts, embeddings or agent chains” and thus struggle to detect or stop novel AI-specific attacks. This is why we’re seeing the rise of specialized AI security solutions that fill the gaps.

The good news is these AI security measures can integrate with your existing stack – for instance, by sending AI incident logs to your SIEM, or by acting as a policy layer on top of your AI APIs.

But expecting legacy tools alone to secure AI is risky. It’s a bit like using a spam filter to catch a sophisticated phishing campaign: the technology mismatch leaves blind spots. In summary, you likely need dedicated AI-aware controls (for input validation, output monitoring, model protection, etc.) that work alongside your existing cybersecurity infrastructure.

What are best practices for managing AI risks in fintech?

Based on emerging industry standards, some best practices for AI risk management in fintech include:

1) Identify and inventory all AI systems – you can’t secure what you don’t know about, so catalog every model, dataset, and AI integration in use (including third-party AI services).
2) Implement strict data controls – enforce policies so that sensitive data (e.g. personal info, account numbers) is adequately protected when used in AI workflows (think encryption, masking, and role-based access to data).
3) Establish an AI governance committee or process – involve compliance, security, and business stakeholders to review AI use cases, approve new deployments, and monitor outcomes.
4) Secure the AI supply chain – vet any external models or APIs, apply security scans to models (similar to code audits), and keep models updated/patched like you would software.
5) Embed policy checks and monitoring at runtime – for instance, use an AI gateway or middleware that can intercept requests and responses to apply rules (blocking disallowed inputs, redacting outputs, rate-limiting calls, etc.).
6) Continuously test and stress-test – perform regular adversarial testing on your AI (e.g. try prompt injection attacks in a safe environment, or feed edge-case inputs) to see how it holds up, and address any vulnerabilities before attackers find them.
7) Train your staff and set clear usage guidelines – ensure that employees using AI tools (like an analyst using ChatGPT) understand the dos and don’ts (for example, not to paste customer data into an unapproved AI service).

By following these best practices, fintechs create a culture and framework of “secure AI by design”, where innovation can proceed but within guardrails that protect the company and its customers.

Will adding AI security slow down innovation?

No. Levo’s AI Security Platform is designed to run in real time without adding friction to user flows or engineering workflows. The right controls operate in milliseconds, so transactions, chats, and risk checks stay fast. More importantly, AI security removes the bottlenecks that slow launches by giving compliance and security teams confidence in new features. Instead of delaying projects over data handling or safety concerns, teams can ship AI products sooner. Security becomes a speed enabler, not a blocker.

How does AI security help us ship AI features faster?

When security, compliance, and risk teams trust the guardrails around AI, approvals happen dramatically faster. With automated policies for data handling, output filtering, and vendor controls, teams avoid long rounds of manual review. This means AI-driven onboarding, fraud workflows, lending, and CX tools reach customers quicker. Engineers can iterate without fear of leaks or missteps, and products can scale AI with less internal resistance. The result is shorter cycles from idea to deployment.

We already use AI for fraud detection, why do we need AI security too?

Fraud models are critical assets, and attackers increasingly target them directly. Without protection, adversaries can probe your scoring engine, learn its behavior, and slip high-value fraud through. Models can also drift or degrade without monitoring, quietly weakening your defenses. AI security ensures your fraud models stay accurate, protected, and tamper-resistant. It safeguards the very AI systems you rely on to stop financial loss.
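One common way to watch for the drift mentioned above is the Population Stability Index (PSI), which compares the distribution of recent model scores with a baseline. The sketch below is a generic illustration rather than a description of how Levo measures drift; it assumes non-empty samples of scores in the range 0 to 1, and the function name and bin count are choices made for this example.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between two non-empty samples of scores in [0, 1]."""

    def proportions(scores: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for s in scores:
            idx = min(int(s * bins), bins - 1)  # a score of 1.0 goes in the last bin
            counts[idx] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(scores), 1e-6) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A frequently quoted rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a significant shift worth investigating, though teams usually tune such thresholds to their own models.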

Can fraudsters really attack or manipulate our AI systems?

Yes, fraudsters now use automation and AI to evade detection with unprecedented precision. They test prompts, payloads, and transaction patterns to find weak spots in scoring engines and chat-based support flows. Deepfakes and synthetic IDs can fool unguarded AI-driven KYC steps. Attackers can also poison training or inference data to blind your models over time. AI security closes these gaps so fraud prevention stays several steps ahead.

How does Levo secure AI in fintech environments?

Levo enforces guardrails around every AI interaction, filtering unsafe prompts and blocking sensitive financial data from leaking into models or vendors. It monitors AI behavior in real time across fraud, onboarding, payments, and CX workflows to catch misuse early. Policy-as-code lets teams define what AI can or cannot do—like restricting transactions or preventing certain data flows. Levo also provides deep visibility into model decisions, agent actions, and API calls. The platform keeps every AI workflow safe, consistent, and compliant.

How does Levo help with PCI, AML, GDPR, SOC 2, and fintech compliance?

Levo automatically redacts regulated data, ensuring cardholder and personal information never passes through unauthorized AI systems. It logs every prompt, output, and model interaction, giving compliance teams tamper-proof audit trails. Policy controls enforce residency, masking, and minimization requirements at runtime. Levo helps demonstrate oversight and governance for AML and risk-based decisions by making AI actions explainable. It enables fintechs to scale AI without violating core regulatory obligations.
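To show what a tamper-evident audit trail can look like in principle, here is a minimal hash-chain sketch: each log entry stores a SHA-256 hash over its own record plus the previous entry's hash, so any later edit breaks the chain. This is a generic technique, not a description of Levo's storage; the function names and record fields are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, record: dict) -> str:
    payload = json.dumps(record, sort_keys=True)  # stable serialization
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log: list, record: dict) -> None:
    """Append a record, chaining it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append(
        {"record": record, "prev_hash": prev_hash, "hash": _entry_hash(prev_hash, record)}
    )


def verify_chain(log: list) -> bool:
    """Return True only if every entry still matches its stored hash and link."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True
```

A chain like this makes tampering detectable rather than impossible, so in practice the latest hash would also be anchored somewhere the log writer cannot modify.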

What makes Levo different from our existing security tools?

Traditional security products don’t understand prompts, embeddings, models, or AI agents—they protect apps, not AI behavior. Levo adds a missing layer that inspects AI inputs, outputs, and actions in real time. It detects prompt injections, misuse, unsafe outputs, identity drift, and anomalous AI behavior that firewalls and IAM tools can’t see. This AI-native visibility and control sit alongside your existing stack without replacing it. Levo closes blind spots that legacy tools were never designed to handle.

Can Levo handle security for third-party AI vendors and fintech APIs?

Yes. Levo discovers and monitors all AI and API activity, including interactions with KYC vendors, fraud APIs, and external LLMs. It enforces policies on what data can be shared with each vendor and prevents regulated data from leaving approved environments. In real time, Levo can block unsafe prompts, restrict API triggers, and catch vendor-side misbehavior. It ensures third-party models don’t introduce compliance exposure or data leakage. You stay fully in control even when AI workloads rely on external providers.

What are the first steps to start securing AI in a fintech org?

Begin by inventorying all AI systems, from fraud models and chatbots to underwriting engines and vendor APIs. Identify which workflows touch regulated, financial, or personal data. Introduce basic guardrails, like prompt filtering, data redaction, and access controls, on the highest-risk flows first. Establish a cross-functional review with security, compliance, and engineering to evaluate AI use cases consistently. These foundations make it far easier to expand security as AI adoption grows.

How do we operationalize AI security without slowing teams down?

The key is embedding security at runtime, not in development bottlenecks. Automated policies handle data masking, vendor routing, and safe output generation without adding manual checks. Real-time monitoring lets teams catch issues early instead of pausing projects after incidents. Developers can integrate AI safely without learning new tools or processes. This approach keeps product velocity high while ensuring every AI workflow is governed and compliant. Security becomes a built-in layer, not a speed bump.

How is sensitive data protected?

Levo redacts regulated data automatically so that cardholder and personal information does not pass through unauthorized AI systems, and policy controls enforce residency, masking, and minimization requirements at runtime.

How is this different from model firewalls or gateways?

Gateways and firewalls see prompts and outputs at the edge. Levo sees the runtime mesh inside the enterprise, including agent-to-agent, agent-to-MCP, and MCP-to-API chains where real risk lives.

What operational insights do we get?

Live health and cost views by model and agent, latency and error rates, spend tracking, and detections for loops, retries, and runaway tasks to prevent outages and control costs.
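As a rough illustration of loop and retry detection, the sketch below counts how often an agent repeats the same tool call with the same arguments within one task trace. The trace format (pairs of tool name and argument string), the function name, and the default threshold are assumptions made for this example.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def find_runaway_actions(
    actions: Iterable[Tuple[str, str]], max_repeats: int = 3
) -> List[Tuple[str, str]]:
    """Return (tool, args) pairs that occur more than max_repeats times in a trace."""
    counts = Counter(actions)
    return sorted(action for action, n in counts.items() if n > max_repeats)
```

For example, a trace in which an agent calls the same search five times with identical arguments would be reported, while a single follow-up fetch would not.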

Does Levo find shadow AI?

Yes. Levo surfaces unsanctioned agents, LLM calls, and third-party AI services, making blind adoption impossible to miss.

Which environments are supported?

Levo covers LLMs, MCP servers, agents, AI apps, and LLM apps across hybrid and multi-cloud footprints.

What is Capability and Destination Mapping?

Levo catalogs agent tools, exposed schemas, and data destinations, translating opaque agent behavior into governable workflows and early warnings for risky data paths.

How does this help each team?

Engineering ships without added toil; Security replaces blind spots with full runtime traces and policy enforcement points; Compliance gets continuous evidence that controls work in production.

How does Runtime AI Visibility relate to the rest of Levo?

Visibility is the foundation. You can add AI Monitoring and Governance, AI Threat Detection, AI Attack Protection, and AI Red Teaming to enforce policies and continuously test with runtime truth.

Will this integrate with our existing stack?

Yes. Levo is designed to complement existing IAM, SIEM, data security, and cloud tooling, filling the runtime gaps those tools cannot see.

What problems does this prevent in practice?

Prompt and tool injection, over-permissioned agents, PHI or PII leaks in prompts and embeddings, region or vendor violations, and cascades from unsafe chained actions.

How does this unlock faster AI adoption?

Levo provides the visibility, attribution, and audit-grade evidence that boards and regulators require, so CISOs can green-light production and the business can scale AI with confidence.

What is the core value in one line?

Unlock AI ROI with rapid, secure rollouts in production, powered by runtime visibility across your entire AI control plane.
