Secure AI for Banking With Levo’s Unified AI Security Platform


Levo: End‑to‑End AI Security for Banking AI

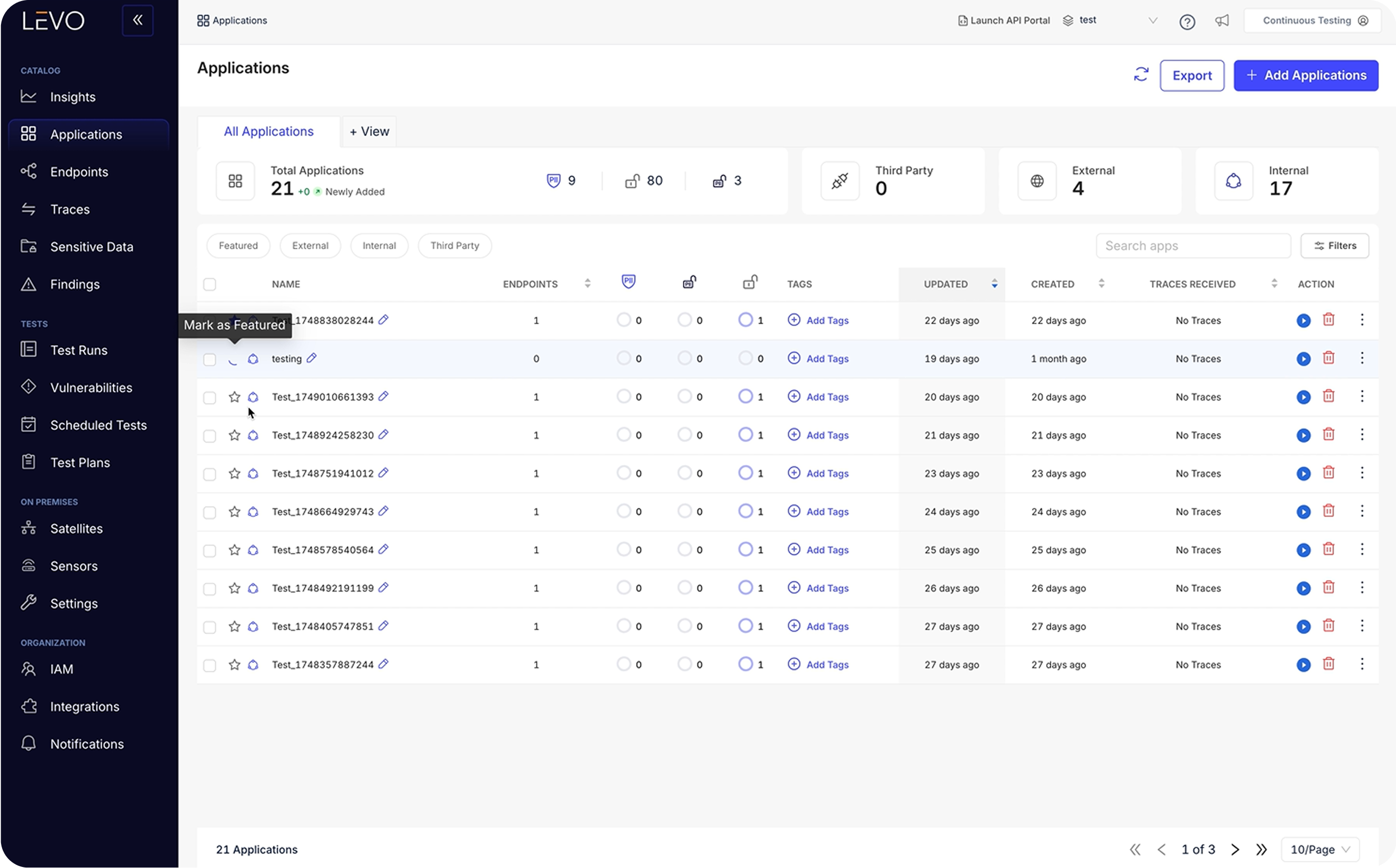

Runtime AI Visibility

Map every model, agent and call across core banking systems, payment rails and risk engines. Understand who is using which AI tools, what data they access and how outputs influence transactions.

AI Monitoring

Continuously monitor prompts, model outputs and chains of AI calls across fraud‑detection pipelines, chatbots and banking APIs. Alert security teams when outputs violate policies or drift from expected behavior.

AI Threat Detection

Detect AI‑specific threats like prompt injection, data poisoning and deepfake‑driven fraud attempts targeting payment systems and AML/KYC workflows. Identify malicious API calls and unauthorized access before they cause financial or reputational damage.

AI Threat Protection

Enforce banking policies in real time. Redact PCI/PII and account data from prompts and responses, block risky tool calls (e.g., unsanctioned payment requests), and apply role‑based and geo‑based restrictions to satisfy PCI and AML regulations.


AI Red Teaming

Safely stress‑test fraud scoring engines, credit decision models and banking chatbots with adversarial prompts and deepfake scenarios. Expose bias, model drift and security vulnerabilities before deploying to production.


Accelerate banking innovation with confidence.

Ship banking AI models and agents faster without compromising safety. Simplify integration into core banking, APIs and data platforms; focus on innovation while Levo handles AI governance.

Gain runtime visibility into AI across core banking and digital channels, integrate AI security into existing SOC workflows and demonstrate compliance with banking cybersecurity frameworks.

Align AI deployments with AML/KYC, PCI, DORA and EU AI Act requirements. Create auditable records, enforce policy, and accelerate approvals across fraud detection, credit scoring and customer‑experience programs.

Frequently Asked Questions

Got questions? Go through the FAQs or get in touch with our team!

What is AI Security for Banking?

AI Security for Banking encompasses tools and governance processes that protect AI models, agents and data flows across core banking, payments, risk scoring AI and customer-facing chatbots. It ensures confidentiality, integrity and compliance while enabling innovation.

Why do banks need AI‑specific security?

AI introduces new risks that traditional controls can’t see: untrusted inputs, prompt injection, model drift and AI‑enabled deepfake fraud. Financial institutions face data leaks and regulatory violations when employees use unapproved tools or when models hallucinate. AI security solutions monitor and govern prompts, embeddings and outputs—capabilities missing in WAFs, SIEMs and DLP.

How are fraudsters using AI against banks?

Attackers weaponize generative AI to create voice and video deepfakes, hyper‑personalized phishing and automated exploits. Deepfake fraud cases surged 1,740% and caused more than $200 M in losses in early 2025. AI can also probe fraud‑detection models to find thresholds and exploit them. Without AI‑aware defenses, banks are vulnerable to large‑scale fraud and reputation damage.

What is “shadow AI” and why is it dangerous?

Shadow AI refers to employees using unapproved AI tools, such as generative AI chatbots, transcription apps or third‑party plug‑ins, without oversight. In banking, over a third of client interactions may already involve AI outside sanctioned systems. This practice can leak sensitive account data, violate PCI and AML rules and undermine governance. Continuous discovery and monitoring are essential.

How do Levo’s guardrails prevent data exposure?

Levo inspects prompts and responses at runtime, redacts PCI/PII and account information and enforces role‑based and geographic policies. It detects unauthorized data flows between models, agents and banking APIs, preventing hidden SaaS AI features from exposing data.
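As a rough illustration of what runtime redaction of a prompt or response can look like, here is a minimal sketch. It is not Levo's implementation: the function names, the regular expressions and the placeholder tokens are all assumptions made for this example. It masks email addresses and only those digit runs that pass the Luhn checksum, which reduces false positives on reference numbers.

```python
import re

# Candidate card numbers: 13-19 digits, optionally separated by spaces or hyphens.
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")
# Simplified email pattern, for illustration only.
EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact(text: str) -> str:
    """Mask Luhn-valid card numbers and email addresses in a prompt or response."""

    def mask_card(match: re.Match) -> str:
        digits = re.sub(r"\D", "", match.group())
        # Leave digit runs that fail the checksum untouched.
        return "[REDACTED_PAN]" if luhn_valid(digits) else match.group()

    text = CARD_RE.sub(mask_card, text)
    return EMAIL_RE.sub("[REDACTED_EMAIL]", text)
```

A production guardrail would cover many more data types (account numbers, national IDs, addresses) and would typically combine pattern matching with contextual classification; the sketch only shows the inspect-then-mask step.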

Can AI models be biased or unexplainable?

Yes. AI risk models can embed human bias, drift over time or make opaque decisions. Regulators require banks to explain credit scores, AML alerts and fraud flags. Levo’s runtime monitoring and audit logs provide explainability and traceability of prompts, decisions and outcomes, supporting fair lending and model risk management.

How does Levo handle third‑party AI and supply‑chain risk?

Banks often rely on cloud‑based AI services and fintech vendors. The OECD warns that third‑party dependencies can create systemic risk. Levo discovers and inventories every AI tool, monitors data flows to external APIs and enforces vendor‑specific policies. It alerts on suspicious behavior and ensures compliance with NCUA, OCC and EU AI Act requirements.

What regulations apply to AI in banking?

Multiple frameworks are emerging. The EU AI Act classifies credit scoring as high‑risk, and its penalties reach up to €35 M or 7% of worldwide annual turnover for the most serious violations. DORA mandates monitoring of ICT and AI incidents, while U.S. regulators like the OCC, SEC and FDIC require model risk management and layered defenses. Levo maps runtime behavior to these requirements, helping banks prove compliance.

How does Levo secure generative AI chatbots and assistants?

Banking chatbots and virtual assistants must protect customer data and comply with PCI and privacy laws. Levo monitors conversations, filters sensitive information and blocks prompt injection or jailbreak attempts. It logs every interaction for audit trails, so that LLM‑powered banking chatbots meet compliance requirements.

What about AML/KYC and risk‑scoring AI?

AML and risk‑scoring AI systems need high sensitivity to detect suspicious activity without introducing bias. Levo’s runtime visibility covers models that handle AML/KYC and risk scoring, detecting anomalies, preventing data poisoning and ensuring that only authorized data is used. It supports explainability so that banks can justify risk scores to regulators.

How do Levo’s AI red‑team tests work?

Levo’s AI red teaming simulates adversarial prompts, data poisoning and deepfake scenarios against fraud engines, credit models and banking chatbots. The platform identifies weaknesses before deployment and recommends control improvements, helping banks meet BIS, FSB and ECB expectations for robust risk management.

How can banks secure AI agents connected to core banking and payment APIs?

AI agents can trigger actions across CRMs, payment rails or customer-service systems, which makes unsecured agents a high-risk entry point. Banks should implement real-time monitoring, enforce least-privilege access, and block unsafe tool calls to prevent unauthorized transactions or data exposure. AI Security for Banking platforms like Levo provide runtime policy enforcement and oversight to keep AI agents aligned with compliance and operational rules.
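The least-privilege and unsafe-tool-call checks described above can be sketched as a deny-by-default authorization step that runs before an agent's tool call is executed. Everything here is hypothetical and chosen for illustration: the role names, the tool names, the `ToolCall` type and the payment limit are assumptions, not part of any Levo API.

```python
from dataclasses import dataclass

# Hypothetical role-to-tool allowlist; a real deployment would load this from policy config.
ALLOWED_TOOLS = {
    "support_agent": {"lookup_balance", "open_ticket"},
    "payments_agent": {"lookup_balance", "initiate_payment"},
}
# Hypothetical per-call ceiling for payment amounts, in the account currency.
MAX_PAYMENT_AMOUNT = 1_000.00


@dataclass
class ToolCall:
    role: str   # role the agent is acting under
    tool: str   # name of the tool the agent wants to invoke
    args: dict  # arguments proposed by the agent


def authorize(call: ToolCall) -> tuple[bool, str]:
    """Return (allowed, reason) for a proposed tool call, denying by default."""
    allowed = ALLOWED_TOOLS.get(call.role, set())  # unknown roles get no tools
    if call.tool not in allowed:
        return False, f"tool '{call.tool}' not permitted for role '{call.role}'"
    if call.tool == "initiate_payment":
        amount = call.args.get("amount")
        if not isinstance(amount, (int, float)) or amount <= 0:
            return False, "payment amount missing or invalid"
        if amount > MAX_PAYMENT_AMOUNT:
            return False, "payment amount exceeds per-call limit"
    return True, "ok"
```

For example, `authorize(ToolCall("support_agent", "initiate_payment", {"amount": 50}))` is denied because the support role has no payment tool, while the same call under `payments_agent` is allowed. Returning a reason alongside the decision gives the audit log something to record.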

How does AI security strengthen AML and KYC systems?

AML AI tools and KYC workflows rely on sensitive identity and transaction data, making them targets for data poisoning, prompt manipulation and evasion techniques. AI security adds protections that validate inputs, detect anomalies, and prevent tampered or misleading data from influencing AML alerts. Banks gain higher detection accuracy, fewer false negatives and auditable, regulator-ready AI behavior across all AML models.

What should banks look for in the best AI security tools for banking?

Financial institutions should prioritize platforms that offer runtime AI visibility, policy enforcement, sensitive data protection, monitoring across model chains and integration with existing SOC tools. They should also support banking-specific requirements like PCI handling, AML/KYC compliance and explainability for credit scoring AI. Solutions like Levo combine AI threat detection, governance and protection into one unified control layer.

How does AI security support LLM chatbots and virtual assistants in banking?

Banking chatbots powered by LLMs must prevent PCI/PII leakage, avoid hallucinated account information and comply with strict customer-communication rules. AI security tools monitor every prompt and response, detect unsafe patterns, and apply guardrails that block unauthorized data or policy violations. This ensures LLM security for banking chatbots while improving trust, accuracy and customer experience.

How is sensitive data protected?

Levo inspects prompts and responses at runtime, redacts PCI/PII and account information, and enforces role‑based and geographic policies.

How is this different from model firewalls or gateways?

Gateways and firewalls see prompts and outputs at the edge. Levo sees the runtime mesh inside the enterprise, including agent-to-agent, agent-to-MCP, and MCP-to-API chains where real risk lives.

What operational insights do we get?

Live health and cost views by model and agent, latency and error rates, spend tracking, and detections for loops, retries, and runaway tasks to prevent outages and control costs.
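One simple way to picture loop and runaway-task detection is to count how often an identical tool call repeats within a single task trace. The sketch below makes several assumptions for illustration only: the trace format, the function name and the threshold are all hypothetical and do not describe how Levo detects these conditions.

```python
from collections import Counter

# Hypothetical threshold: flag a call once it repeats this many times in one task.
REPEAT_THRESHOLD = 3


def find_runaway_calls(trace, threshold=REPEAT_THRESHOLD):
    """Return the (tool, args) pairs that occur at least `threshold` times in a trace.

    `trace` is a list of (tool_name, args_string) tuples in call order.
    Repeats are counted across the whole trace, not only consecutive calls.
    """
    counts = Counter(trace)
    return sorted(call for call, n in counts.items() if n >= threshold)
```

A trace in which an agent issues the same search four times would be flagged, while a trace of distinct calls returns an empty list. Real detectors would also weigh elapsed time, token spend and error codes.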

Does Levo find shadow AI?

Yes. Levo surfaces unsanctioned agents, LLM calls, and third-party AI services, so unapproved adoption does not go unnoticed.

Which environments are supported?

Levo covers LLMs, MCP servers, agents, AI apps, and LLM apps across hybrid and multi-cloud footprints.

What is Capability and Destination Mapping?

Levo catalogs agent tools, exposed schemas, and data destinations, translating opaque agent behavior into governable workflows and early warnings for risky data paths.

How does this help each team?

Engineering ships without added toil; Security replaces blind spots with full runtime traces and policy enforcement points; and Compliance gets continuous evidence that controls work in production.

How does Runtime AI Visibility relate to the rest of Levo?

Visibility is the foundation. You can add AI Monitoring and Governance, AI Threat Detection, AI Attack Protection, and AI Red Teaming to enforce policies and continuously test with runtime truth.

Will this integrate with our existing stack?

Yes. Levo is designed to complement existing IAM, SIEM, data security, and cloud tooling, filling the runtime gaps those tools cannot see.

What problems does this prevent in practice?

Prompt and tool injection, over-permissioned agents, PCI or PII leaks in prompts and embeddings, region or vendor violations, and cascades from unsafe chained actions.

How does this unlock faster AI adoption?

Levo provides the visibility, attribution, and audit-grade evidence that boards and regulators require, so CISOs can green-light production and the business can scale AI with confidence.

What is the core value in one line?

Unlock AI ROI with rapid, secure rollouts in production, powered by runtime visibility across your entire AI control plane.
