Levo’s AI Firewall to protect in-house AI

end-to-end

%20(1).png)

%20(1).png)

Custom AI is transformative, and

catastrophic if left unmanaged

Teams spin up separate assistants, agents, and RAG pipelines across different tools and environments. Governance becomes fragmented, controls vary by team, and risk accumulates quietly. Leadership hesitates to scale what it can’t standardize or confidently oversee.

AI interactions traverse multiple systems, yet most enterprises can’t trace what the AI accessed, what it invoked, and what it returned. Without a clear execution chain, incident response becomes slow and approvals become conservative. This uncertainty is one of the biggest blockers to broad deployment.

As soon as AI touches regulated or proprietary data, the burden shifts to proving safeguards, enforcing policy, and maintaining auditability. Without built-in controls, every launch becomes a negotiation between engineering speed and risk tolerance. The result is delayed productionization and reduced ambition in what AI can safely do.

AI at scale amplifies spend across compute, tokens, and operational overhead. Without guardrails that constrain actions and data flows, usage becomes hard to predict and easy to abuse. When costs rise faster than value, expansion slows, even if the pilot was successful.

AI features often sit in high-volume user journeys where performance is non-negotiable. If security requires complex routing, adds unpredictable delays, or breaks workflows, teams pull back. That creates a false choice: ship quickly or protect fully, rarely both.

WAFs, DLP, IAM, and SIEMs were built for predictable requests, known endpoints, and direct user intent. AI workflows are dynamic: they evolve across turns, invoke tools, and trigger chains of API calls and data retrieval. When tools can’t see or control that execution path, enterprises can’t confidently scale AI, even when the business case is clear.

Ship AI to production with

confidence, not compromise

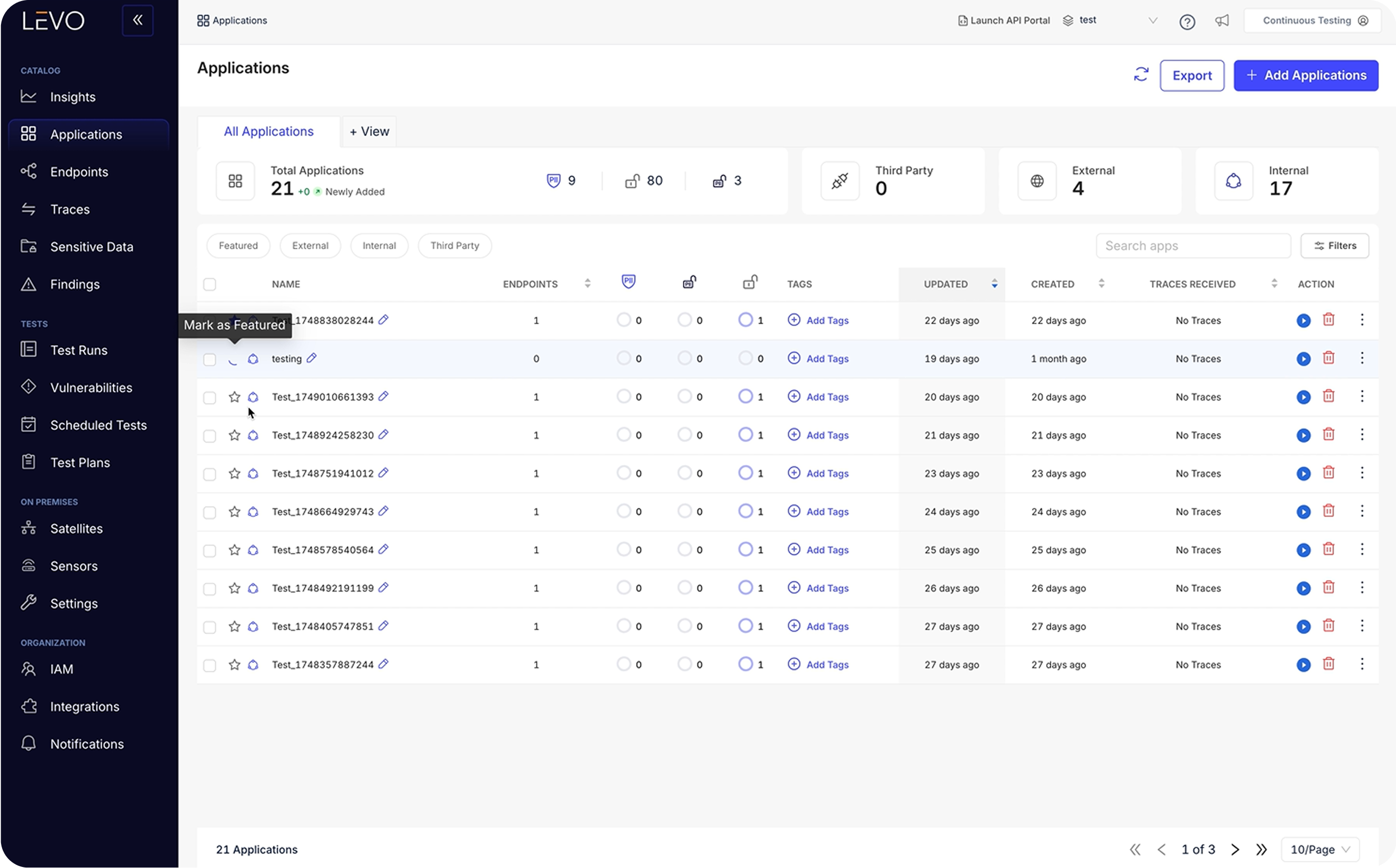

End-to-End Transaction Visibility and Coverage

Levo gives complete visibility into every AI transaction across prompts, agents, tool calls, API calls, and downstream actions. This replaces guesswork with evidence: what was accessed, what was invoked, what was returned, and why enforcement occurred. Visibility also reduces internal friction. This clarity accelerates approvals and shortens the path from release to scale.

Custom Guardrails

Levo lets you codify enterprise policy as enforceable guardrails: simple where it should be simple, powerful where it needs to be precise. You can roll policies out progressively (monitor → alert → block) to avoid disruption while still moving quickly. Guardrails are auditable and consistent across teams, so governance doesn’t become a bespoke, fragile process per application.

DLP for AI

Levo applies AI-aware data protection inline to prevent sensitive data from being exposed through prompts or responses. Controls can redact, mask, block, or route events to workflows based on risk and business context. This reduces the most common enterprise adoption blocker without forcing teams to stop innovating. With DLP built into the execution path, compliance becomes a built-in property of deployment, not a last-minute audit exercise.

System Prompt Leakage Protection

Levo prevents sensitive system prompts and internal instructions from leaving your environment, even when users try to coax them out indirectly. It detects leakage patterns in real time and applies masking or policy actions before responses are returned. This protects proprietary logic and reduces the fear of exposing “how your AI works” to the outside world. With that risk contained, teams can ship more capable assistants without watering down functionality.

.png)

Agent Tool-Use Policy Enforcement

Levo enforces clear boundaries on what agents can do, which tools they can call, and what parameters they can pass, inline and in real time. Policies are designed to be explicit, reviewable, and easy to approve, so agent capabilities don’t become liabilities. When a request pushes an agent outside defined scope, Levo blocks or constrains the action before it reaches downstream systems. That makes agentic AI safe to expand from internal pilots to customer facing workflows.

.png)

The value of governed third-party AI spans every enterprise team

Stop letting late stage security concerns derail releases. Launches move from “approval-heavy” to repeatable, and teams spend less time reworking integrations, rewriting prompts, or defending AI behavior after incidents.

Reduce the daily burden of manual reviews, fragmented controls, and unclear accountability across agents, tools, and APIs. AI Firewall provides end-to-end visibility and inline enforcement across the full execution chain

Replace one-off escalations with a consistent governance model. AI Firewall applies auditable guardrails, prevents sensitive data exposure, and maintains traceable records of access and outputs across AI transactions.

Productionize in-house AI with confidence, not compromise.

Frequently Asked Questions

Got questions? Go through the FAQs or get in touch with our team!

What is an AI firewall, and why do I need one?

An AI firewall is a security layer designed to protect artificial intelligence systems, particularly language models and AI agents, from misuse and data leakage. Unlike traditional firewalls that inspect network traffic, an AI firewall examines the inputs, outputs and downstream actions of AI applications to enforce policies and prevent sensitive information from being exposed. It is essential because AI can process proprietary data, make decisions, and trigger actions in business systems: introducing new risks that traditional tools can’t see. Levo’s AI Firewall goes beyond simple filtering; it monitors the entire chain of prompts, agents, tools and APIs, making it practical for in‑house AI that needs robust protection without slowing down innovation.

How is an AI firewall different from a traditional firewall?

Traditional firewalls focus on network traffic: controlling ports, IP addresses and protocols, to block known bad actors. An AI firewall, on the other hand, inspects the content and behavior of AI applications. It looks at prompts, responses and API calls to detect misuse, data leakage or unauthorized actions that wouldn’t show up in a packet header. In short, a traditional firewall guards the “data plane,” while an AI firewall guards the “AI plane.” Levo’s AI Firewall combines both: it sits inline, understands AI context and agent behavior, and applies policies across prompts and downstream tool calls to keep AI outcomes safe.

How does an AI firewall protect generative AI applications like ChatGPT or other large language models?

Generative AI systems produce text based on learned patterns, which means they can inadvertently reveal sensitive data or follow hidden instructions (prompt injection) embedded in user inputs. An AI firewall monitors each request and response, identifies attempted injection or sensitive content, and applies guardrails, such as redaction, blocking or rewriting to prevent data leaks or unsafe behavior. Levo’s AI Firewall enhances this by tracking how a request flows through agents and tools; it can block malicious instructions from external documents (indirect prompt injection), enforce tool limits and keep the AI aligned to policies, all while maintaining performance.

What is a prompt injection attack, and how can it be prevented?

Prompt injection is a technique where attackers embed malicious instructions into a prompt, retrieved document or tool output to trick a language model into ignoring its original instructions. This can cause the AI to leak secrets, produce harmful content or perform unauthorized actions. Prevention involves scanning the text before it reaches the model, detecting patterns that resemble hidden commands, and neutralizing or rejecting them. Levo’s AI Firewall does this by inspecting both direct user inputs and any external data fetched by agents or retrieval systems, blocking malicious instructions in real time.

Why is protecting AI models from attacks different from traditional cybersecurity?

AI models interpret natural language and produce varied outputs, so traditional signature‑based security tools can’t anticipate every malicious pattern. Attacks may appear as harmless sentences but cause the model to behave unexpectedly. Protecting AI requires understanding both the input and the context around it: what data the model can access, what tools it can call and how its responses are used downstream. Levo’s AI Firewall addresses this by applying policy controls at multiple levels: masking sensitive data in prompts and responses, enforcing agent tool use and monitoring API calls to prevent unauthorized actions that would bypass legacy security tools.

What does AI data leakage mean, and how is it prevented?

AI data leakage occurs when a model outputs sensitive or proprietary information that it should keep private, such as personal identifiers, financial records or internal instructions. This can happen if training data contains confidential content or if a model is manipulated into revealing system prompts. Preventing leakage requires classifying sensitive data, redacting or tokenizing it in real time and controlling what the AI can access. Levo’s AI Firewall integrates data loss prevention directly into the AI workflow, scanning prompts and responses for sensitive patterns and enforcing masking or blocking policies so that no private data leaves your environment.

What are the main security threats to large language models (LLMs)?

LLMs face several threats: prompt injection and prompt hijacking, indirect prompt injection from retrieved documents, jailbreaking (multi‑turn manipulation), system prompt leakage, and misuse of downstream tool or API access. These threats can lead to data exposure, compliance violations or unauthorized actions. Mitigations include inspecting inputs and outputs, enforcing tool use policies and maintaining full transaction visibility. Levo’s AI Firewall combines these controls with a single inline architecture, ensuring LLMs behave safely even when they interact with agents, tools and APIs across enterprise environments.

If I already have a web application firewall (WAF), do I need an AI firewall?

A WAF protects web applications by filtering HTTP traffic, but it cannot understand prompts, model context or downstream actions triggered by AI. It also lacks the ability to detect hidden instructions in user content or enforce limits on AI agents. An AI firewall complements your existing WAF by focusing on the unique risks of AI workflows: text inputs, model responses, agent tool calls and API interactions. Levo’s AI Firewall can coexist with traditional firewalls while providing AI-specific controls, ensuring comprehensive protection without forcing you to rip and replace your current stack.

How should I evaluate AI firewall vendors?

When comparing AI firewalls, look at the breadth of protection (does it cover prompts, agents, tools and APIs?), deployment model (is it inline with minimal latency and no code changes?), policy flexibility (can you define auditable guardrails?) and data protection (does it prevent data leakage without sending content to third parties?). Also consider ease of rollout, integration with existing workflows, and the vendor’s experience with enterprise use cases. Levo’s AI Firewall is designed for enterprise in-house AI: it deploys within your environment, offers customizable guardrails, enforces policies inline, and integrates with the broader Levo platform for runtime visibility, detection and governance.

What is the ROI of investing in an AI firewall?

The return on investment comes from faster, safer AI deployment, reduced incident costs and improved compliance posture. An AI firewall reduces the need for manual reviews, minimizes the risk of data leaks or unauthorized actions and speeds up approvals, allowing new AI features to reach users sooner. It also prevents fines and reputational damage from AI misuse. Levo’s AI Firewall can lower infrastructure costs by processing data locally (no egress fees), cut operational overhead through unified controls and help AI initiatives generate revenue faster, turning security from a cost center into a growth enabler.

What are the risks of deploying an AI firewall?

Most AI firewalls introduce two common risks in production: latency and vendor exposure. Latency shows up when traffic is routed through external gateways or heavy inspection layers that sit outside the application path. Vendor exposure appears when prompts, responses, and sensitive context are sent to third party systems for analysis: creating new security, privacy, and compliance concerns. Levo’s AI Firewall is designed to avoid both. It runs inline with sub-millisecond enforcement and keeps sensitive data within the enterprise environment, eliminating unnecessary routing and reducing third-party risk while still providing end to end protection for custom AI.

Can an AI firewall work without zero data egress?

Yes. AI security can be implemented inside the enterprise environment so prompts and responses are inspected locally. This reduces third-party exposure, supports stricter data residency requirements, and lowers the operational risk of moving sensitive information outside controlled boundaries. Levo’s AI Firewall is built for zero sensitive data egress, enabling strong protection without introducing third-party security and privacy risks.

How does an AI firewall protect AI agents that use tools and APIs?

AI agents can do more than generate text: they can call tools, trigger workflows, and invoke APIs. Securing agents requires controlling what actions are allowed, limiting what data can be accessed, and preventing unsafe or unintended tool usage. The right firewall enforces these rules in real time, not after the fact. Levo’s AI Firewall adds agent tool-use policy enforcement across tools and APIs so agentic AI can be expanded safely beyond pilots.

How do companies secure LLM API endpoints and AI APIs?

AI APIs are frequently exposed to internal teams, partners, and applications, which increases the risk of misuse, data leakage, and abuse at scale. Securing them typically requires monitoring requests and responses, applying guardrails, and enforcing policy consistently across all endpoints and routes, especially where AI connects to downstream services. Levo’s AI Firewall provides end-to-end transaction visibility and inline enforcement across prompts, tools, and APIs, so AI endpoints can be secured without slowing delivery.

What is the best way to prevent data leakage in generative AI applications?

Preventing leakage starts with identifying sensitive data and ensuring it does not appear in outputs or flow into unsafe systems. Effective approaches include redaction, masking, policy-based blocking, and audit trails that show what data was accessed and returned. This is especially important for in-house AI that touches proprietary knowledge and regulated data. Levo’s AI Firewall includes DLP for AI, applying inline data protection to prompts and responses so production rollouts can move faster without increasing exposure.

How do companies protect system prompts and internal AI instructions?

System prompts often contain proprietary instructions that define how an in-house AI behaves. If exposed, they can reveal business logic, safety constraints, and sensitive operating details: creating both security and IP risk. Strong protection requires detecting extraction attempts and preventing these instructions from being disclosed. Levo’s AI Firewall includes system prompt leakage protection, helping keep proprietary AI behavior private while enabling broader adoption of advanced assistants.

How do companies secure AI tool integrations like MCP servers?

As AI systems connect to enterprise tools, connectors become high value control points. Security needs to ensure integrations do not become pathways for data leakage, unsafe actions, or uncontrolled access, especially when agents are invoking tools dynamically. A modern AI firewall secures these integrations at runtime, not just through configuration checklists. Levo’s AI Firewall extends protection to MCP-style tool integrations, enforcing policy and monitoring behavior so connector adoption scales without creating new attack surfaces.

What is the process of deploying an AI firewall, and how can enterprises get started?

Enterprises typically start by identifying where AI enters the organization: customer-facing AI features, internal copilots, and agent workflows, and then choosing a deployment approach. The most practical rollout follows three steps: visibility first (observe AI behavior and flows), guardrails next (define what “safe” means for data and actions), and enforcement last (progressively move from monitor to alert to block). The goal is to secure production without breaking user experience or slowing delivery. Levo supports this exact adoption path. It deploys with minimal disruption, provides end-to-end visibility across prompts, agents, tools, and APIs, and enables progressive enforcement so teams can start safely, prove value quickly, and scale AI security as AI usage expands.

Show more