AI Agent Security Platform for AI leadership



AI Agent Security Platform for Rapid, Secure AI Agent Adoption

Runtime Visibility Across the AI Control Plane

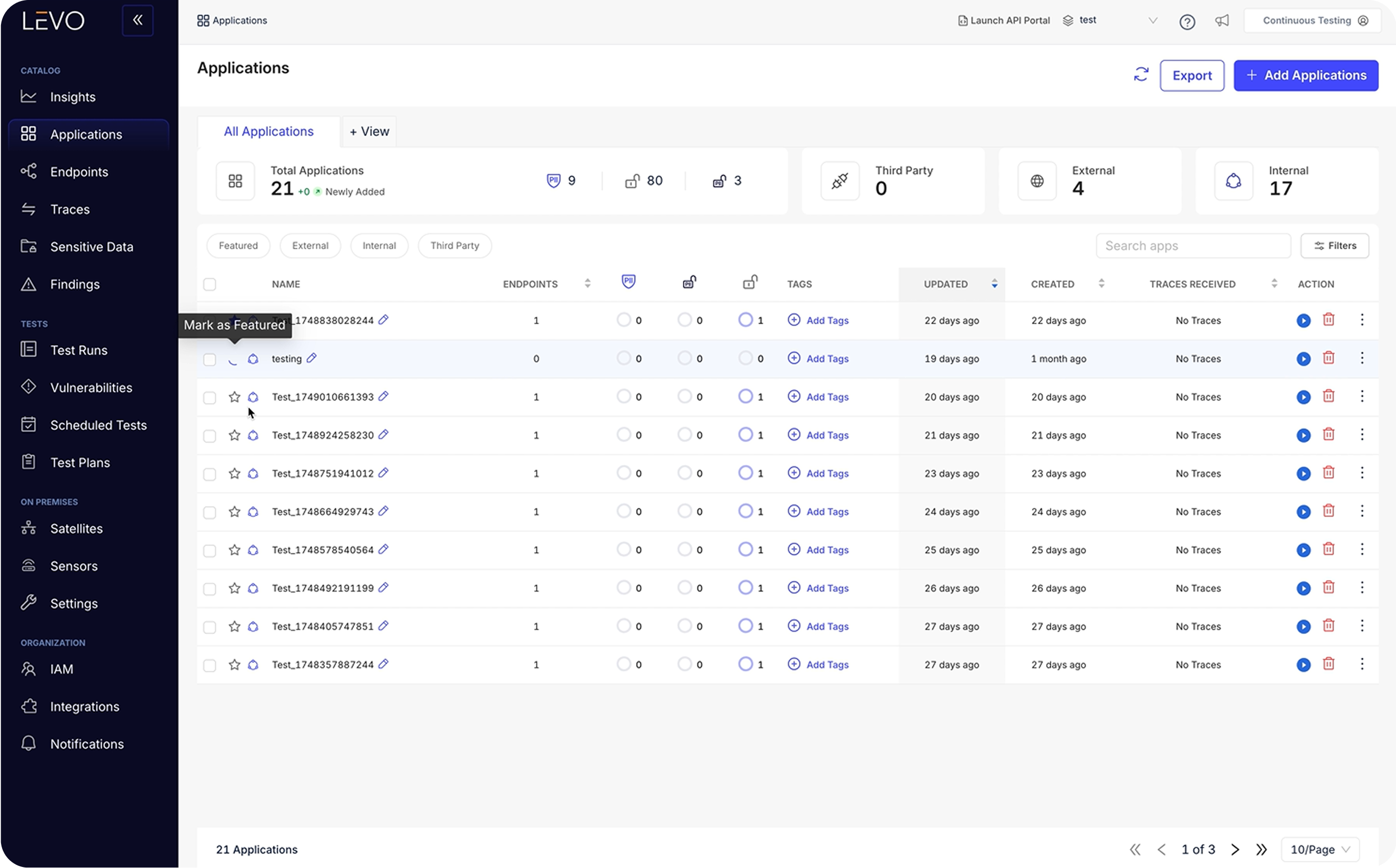

Levo maps every internal and third-party AI asset in use (agents, APIs, and LLMs) and traces data flows in real time. This eliminates shadow AI and anchors security in reality, not assumptions.

Precision Detection of Agent Threats

Levo detects unauthorized access, identity drift, session sprawl, data misuse, and regulatory violations. Security teams get targeted alerts tied to real runtime risk, not noise.

Real-Time Enforcement That Blocks Abuse

Levo blocks agents from overstepping access boundaries, leaking data, or chaining into risky actions. Identity-based and context-aware policies stop exploits before they spread.

Adversarial Red Teaming with Real Application Context

Levo simulates prompt injections, data leaks, privilege escalation, and agent chaining under real-world conditions. This validates defenses before deployment and closes gaps legacy testing misses.


End-to-End Monitoring for Risk and Policy Gaps

Levo continuously scores risk, enforces policy-as-code, and monitors agent-to-agent interactions. Security teams gain clarity, accountability, and audit-ready evidence without slowing AI innovation.


AI Agent Platform Built for the Realities of Enterprise Teams

Engineering leaders can safely bring AI into the product and development process. With Levo, agents can code, document, and automate workflows securely, accelerating delivery without creating downstream risk.

Security teams get complete oversight and control of AI agents in production without needing to scale headcount. Levo surfaces risk, enforces policies, and blocks abuse in real time, so lean teams can govern at scale.

Compliance teams can stay ahead of emerging regulations and frameworks such as the EU AI Act and the NIST AI RMF. Levo enforces data residency, auditability, and role accountability across every agent workflow.

Secure AI Agents with Levo. Empower Automation, Not Attackers

Frequently Asked Questions

Got questions? Go through the FAQs or get in touch with our team!

What is AI agent security and why does it matter?

AI agent security refers to the systems and safeguards put in place to protect AI agents from misuse, compromise, or misalignment with enterprise goals. As agents gain the ability to act autonomously (making API calls, retrieving data, or even initiating transactions), they introduce a new, machine-speed attack surface. A single compromised agent can behave like a rogue insider, leaking sensitive data or performing unauthorized actions.

Why are traditional security tools insufficient for AI agents?

Traditional tools focus on static analysis, human identity, or predictable application behavior. AI agents, by contrast, are dynamic, unpredictable, and often operate under non-human identities. Legacy IAM and perimeter defenses struggle to track their behavior, making them ill-equipped to prevent prompt injections, drift, or data exfiltration by autonomous agents.

What are the main risks of deploying AI agents in enterprise environments?

Key risks associated with deploying AI agents include:

* Prompt injection attacks

* Unauthorized access and privilege escalation

* Shadow AI (unsanctioned agents)

* Data leakage (PII, PHI, IP)

* Rogue agent behavior due to misalignment or emergent actions

These risks escalate with the scale and autonomy of agents in production.

How do prompt injections compromise AI agents?

Prompt injection occurs when malicious input alters the agent’s intended behavior. This can result in agents leaking confidential data, executing unsafe actions, or being redirected toward unauthorized tasks. OWASP ranks prompt injection as the top risk (LLM01) in its Top 10 for LLM Applications, and real-world CVEs have documented agents manipulated this way.

Can AI agents expose sensitive data or IP?

Yes. Agents often interface with internal databases, wikis, or code repositories. If compromised, they can leak secrets like API keys, credentials, or regulated data. Since they act autonomously, such leaks can occur at scale and go undetected without proper observability.

How does Levo help detect threats from AI agents?

Levo identifies suspicious behavior using real-time runtime telemetry. It detects agent-resource and identity-agent mismatches, session drift (unexpected tool changes), sensitive data exposure attempts (AI DLP), and region or vendor violations.

Each detection is context-aware and mapped back to enterprise policy to stop emerging threats early.

What blocking capabilities does Levo offer for AI agents?

Levo enforces real-time blocking through identity-based runtime validation, resource and vendor access enforcement, agent-to-agent communication controls, AI DLP for sensitive data redaction, and adaptive blocking tuned to context and session risk. This enables surgical enforcement without halting business operations.
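To make "AI DLP for sensitive data redaction" concrete, here is a minimal, illustrative sketch of pattern-based redaction applied to agent output before it crosses a trust boundary. It is not Levo's implementation. The `redact` function and its three regex patterns (email address, US SSN, and an `sk-`-prefixed API key) are hypothetical assumptions. Production DLP typically layers context-aware classifiers on top of patterns like these.

```python
import re
from typing import List, Tuple

# Hypothetical detection patterns for illustration only; real DLP rules are
# far broader and usually combine patterns with contextual classification.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "API_KEY": re.compile(r"\bsk-[A-Za-z0-9]{16,}\b"),
}


def redact(text: str) -> Tuple[str, List[str]]:
    """Replace each match with a typed placeholder and report which types were found."""
    found = []
    for label, pattern in PATTERNS.items():
        # subn returns the rewritten text and the number of substitutions made.
        text, count = pattern.subn(f"[REDACTED:{label}]", text)
        if count:
            found.append(label)
    return text, found


if __name__ == "__main__":
    agent_output = "Contact jane.doe@example.com, SSN 123-45-6789, key sk-abcdef0123456789XYZ"
    clean, hits = redact(agent_output)
    print(clean)  # Contact [REDACTED:EMAIL], SSN [REDACTED:SSN], key [REDACTED:API_KEY]
    print(hits)   # ['EMAIL', 'SSN', 'API_KEY']
```

Returning the detected types alongside the redacted text lets an enforcement layer decide whether to pass the sanitized output through, raise an alert, or block the action outright.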

How does Levo monitor AI agent activity at runtime?

Levo gives end-to-end observability across the AI control plane, including agents, APIs, and LLMs. It tracks token flows, agent interactions, tool use, and data access in real time. This also includes tracing multi-agent chains, surfacing shadow integrations, and scoring workflows for operational and compliance risk.

How does Levo enforce compliance for AI agents (HIPAA, GDPR, etc.)?

Levo supports enterprise-grade audit logging, data residency enforcement, and AI DLP to ensure no regulated data leaves secure environments. It applies policy-as-code guardrails (e.g., "no PHI may leave this domain") and creates traceable records of agent actions for audit and legal reviews.
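A guardrail like "no PHI may leave this domain" can be written as data and evaluated against every outbound agent call, rather than scattered through application code. The sketch below illustrates only the general policy-as-code idea, under stated assumptions. It is not Levo's policy language. `EgressEvent`, `Rule`, `evaluate`, the domain `internal.example.com`, and the region `us-east-1` are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class EgressEvent:
    """Hypothetical record of an agent sending data to a destination."""
    agent: str
    destination: str  # hostname the agent is calling
    region: str       # region where the destination is hosted
    data_classes: frozenset = field(default_factory=frozenset)  # e.g. {"PHI", "PII"}


@dataclass(frozen=True)
class Rule:
    """Flags events carrying `data_class` unless both domain and region are allowed."""
    name: str
    data_class: str
    allowed_domains: frozenset
    allowed_regions: frozenset

    def violated_by(self, event: EgressEvent) -> bool:
        if self.data_class not in event.data_classes:
            return False  # rule does not apply to this payload
        in_domain = any(
            event.destination == d or event.destination.endswith("." + d)
            for d in self.allowed_domains
        )
        return not (in_domain and event.region in self.allowed_regions)


def evaluate(rules, event):
    """Return the names of all violated rules; an empty list means the call may proceed."""
    return [r.name for r in rules if r.violated_by(event)]


# Hypothetical encoding of "no PHI may leave this domain".
NO_PHI_EGRESS = Rule(
    name="no-phi-outside-domain",
    data_class="PHI",
    allowed_domains=frozenset({"internal.example.com"}),
    allowed_regions=frozenset({"us-east-1"}),
)

if __name__ == "__main__":
    outbound = EgressEvent("claims-agent", "api.llm-vendor.example", "eu-west-1", frozenset({"PHI"}))
    internal = EgressEvent("claims-agent", "records.internal.example.com", "us-east-1", frozenset({"PHI"}))
    print(evaluate([NO_PHI_EGRESS], outbound))  # ['no-phi-outside-domain']
    print(evaluate([NO_PHI_EGRESS], internal))  # []
```

Because rules are plain values, they can be versioned, reviewed, and attached to audit records in the same way as any other configuration.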

Can Levo secure custom agents and third-party tools alike?

Yes. Levo covers in-house, open-source, and SaaS-based agent architectures, tracing activity across fine-tuned LLMs, orchestration layers, and external APIs. This makes it agnostic to agent origin and adaptable across hybrid environments.

Why is runtime visibility critical for agent security?

Unlike traditional applications, agents act dynamically, changing behavior with each session. Runtime visibility into token flows, tool calls, and identity mappings ensures organizations can detect drift, validate actions, and block anomalous behavior before damage is done.

How does AI agent red teaming work?

Red teaming simulates adversarial attacks on agents (using prompt injection, fuzzing, rate abuse, or privilege chaining) to validate resilience before production deployment. Levo enables such tests with runtime awareness, ensuring security is grounded in the agent’s actual behavior rather than theoretical assumptions.

What are best practices for securing AI agents in production?

Experts recommend:

* Zero-trust runtime enforcement

* Prompt sanitization and output filtering

* End-to-end audit logging

* Agent manifest validation (approved actions only)

* Real-time anomaly detection and red teaming
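Of the practices above, agent manifest validation is the simplest to illustrate. The following minimal deny-by-default sketch is built on assumptions. The in-memory `MANIFEST` keyed by agent identity, and the `authorize` and `call_tool` helpers, are hypothetical. A real deployment would load the manifest from managed configuration and enforce it outside the agent's own process.

```python
# Hypothetical manifest: each agent identity lists the only (tool, action) pairs it may use.
MANIFEST = {
    "docs-agent": {("wiki", "read"), ("wiki", "write")},
    "billing-agent": {("payments_api", "read")},
}


class ActionDenied(Exception):
    """Raised when an agent attempts an action outside its manifest."""


def authorize(agent: str, tool: str, action: str) -> None:
    """Deny by default: unknown agents and unlisted actions are both rejected."""
    allowed = MANIFEST.get(agent, set())
    if (tool, action) not in allowed:
        raise ActionDenied(f"{agent} is not approved for {tool}:{action}")


def call_tool(agent: str, tool: str, action: str, payload: dict) -> dict:
    """Gate every tool call through the manifest check before dispatching it."""
    authorize(agent, tool, action)
    # Placeholder for the real dispatch to the tool or API.
    return {"tool": tool, "action": action, "status": "ok", "payload": payload}


if __name__ == "__main__":
    print(call_tool("billing-agent", "payments_api", "read", {"invoice": 42}))
    try:
        call_tool("billing-agent", "payments_api", "refund", {"invoice": 42})
    except ActionDenied as err:
        print("blocked:", err)
```

Keying the allowlist on agent identity, not on the tool alone, means two agents can share a tool while holding different permissions on it.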

These practices close the gap between security policy and agent behavior.

How does Levo help teams go from pilot to production securely?

Levo eliminates common blockers like shadow agents, unapproved vendors, or compliance uncertainty by mapping and securing agent behavior in real time. This gives security and compliance teams the clarity to approve deployments faster without sacrificing control.

What’s the ROI of investing in AI agent security with Levo?

Securing AI agents accelerates adoption by removing friction from governance, reducing incident response costs, and ensuring stable, compliant automation. Organizations avoid delays, regulatory fines, and reputational damage while gaining confidence to scale agent-powered workflows safely and efficiently.

How is sensitive data protected?

Levo applies AI DLP to detect and redact sensitive data such as PII, PHI, and secrets in prompts, outputs, and agent-to-agent traffic, and enforces data residency so that regulated data does not leave secure environments.

How is this different from model firewalls or gateways?

Gateways and firewalls see prompts and outputs at the edge. Levo sees the runtime mesh inside the enterprise, including agent-to-agent, agent-to-MCP, and MCP-to-API chains, where real risk lives.

What operational insights do we get?

Live health and cost views by model and agent, latency and error rates, spend tracking, and detections for loops, retries, and runaway tasks to prevent outages and control costs.
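Detecting loops and runaway tasks can be approximated by watching each session's stream of tool calls. The minimal sketch below rests on stated assumptions. The `RunawayDetector` class, its thresholds, and the choice to identify calls by a (tool, arguments) signature are hypothetical illustrations. They do not describe how Levo implements these detections.

```python
from collections import Counter, deque
from typing import Optional


class RunawayDetector:
    """Flags a session whose recent tool calls repeat too often or exceed a call budget.

    All thresholds are illustrative assumptions, not recommended values.
    """

    def __init__(self, window: int = 10, max_repeats: int = 3, max_calls: int = 100):
        self.recent = deque(maxlen=window)  # signatures of the last `window` calls
        self.max_repeats = max_repeats
        self.max_calls = max_calls
        self.total = 0

    def observe(self, tool: str, args: str) -> Optional[str]:
        """Record one call; return an alert string if the session looks stuck or runaway."""
        self.total += 1
        self.recent.append((tool, args))
        if self.total > self.max_calls:
            return f"budget exceeded: {self.total} calls"
        repeats = Counter(self.recent)[(tool, args)]
        if repeats >= self.max_repeats:
            return f"possible loop: {tool}({args}) seen {repeats}x in last {len(self.recent)} calls"
        return None


if __name__ == "__main__":
    detector = RunawayDetector(window=5, max_repeats=3)
    for _ in range(3):
        alert = detector.observe("search", "q=order status")
    print(alert)  # possible loop: search(q=order status) seen 3x in last 3 calls
```

A bounded window keeps the memory cost per session constant. The separate call budget catches tasks that never repeat exactly but still run far longer than expected.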

Does Levo find shadow AI?

Yes. Levo surfaces unsanctioned agents, LLM calls, and third-party AI services, making blind adoption impossible to miss.

Which environments are supported?

Levo covers LLMs, MCP servers, agents, AI apps, and LLM apps across hybrid and multi-cloud footprints.

What is Capability and Destination Mapping?

Levo catalogs agent tools, exposed schemas, and data destinations, translating opaque agent behavior into governable workflows and early warnings for risky data paths.

How does this help each team?

Engineering ships without added toil; Security replaces blind spots with full runtime traces and policy enforcement points; Compliance gets continuous evidence that controls work in production.

How does Runtime AI Visibility relate to the rest of Levo?

Visibility is the foundation. You can add AI Monitoring and Governance, AI Threat Detection, AI Attack Protection, and AI Red Teaming to enforce policies and continuously test with runtime truth.

Will this integrate with our existing stack?

Yes. Levo is designed to complement existing IAM, SIEM, data security, and cloud tooling, filling the runtime gaps those tools cannot see.

What problems does this prevent in practice?

Prompt and tool injection, over-permissioned agents, PHI or PII leaks in prompts and embeddings, region or vendor violations, and cascades from unsafe chained actions.

How does this unlock faster AI adoption?

Levo provides the visibility, attribution, and audit-grade evidence that boards and regulators require, so CISOs can green-light production and the business can scale AI with confidence.

What is the core value in one line?

Unlock AI ROI with rapid, secure rollouts in production, powered by runtime visibility across your entire AI control plane.
