Levo’s AI Gateway to Govern Third-Party AI


Third-Party AI is simultaneously driving up everything: productivity gains

Third-party AI spreads through individual workflows, not enterprise rollouts. When adoption is personal and decentralized, leadership loses visibility into which tools are in use, who is using them, and what business processes they touch. That uncertainty slows scale decisions and increases exposure at the same time.

Employees share context to get better results, which often includes customer data, internal documents, code, or strategic information. Once that data is sent to an external AI tool, control over retention, reuse, and downstream access becomes unclear. What starts as productivity quickly becomes a security and compliance liability.

Regulators and auditors require evidence of controls, not intent. In decentralized AI usage, it is hard to prove what data was shared, which provider processed it, and which policies were applied. Without auditable records, approvals slow down and risk teams default to restriction.

When teams create accounts independently and share credentials, enforcement becomes inconsistent by design. “Approved AI” stays on paper while real usage moves elsewhere. The organization ends up with shadow spend, uneven controls, and bypass that grows under delivery pressure.

AI outputs are copied into customer responses, tickets, documents, and internal decisions. If outputs contain unsafe guidance, incorrect facts, or policy-violating content, the impact spreads quickly through downstream processes. This turns a model issue into a business risk issue.

Traditional controls were built for known applications and predictable traffic. They do not consistently understand prompts, identity context, provider selection, or AI-enabled SaaS workflows. Without a governance layer at the AI boundary, enterprises either accept unmanaged exposure or block the tools and lose the benefits.

Keep GenAI productivity high, without attack surface expansion

Make usage visible and accountable

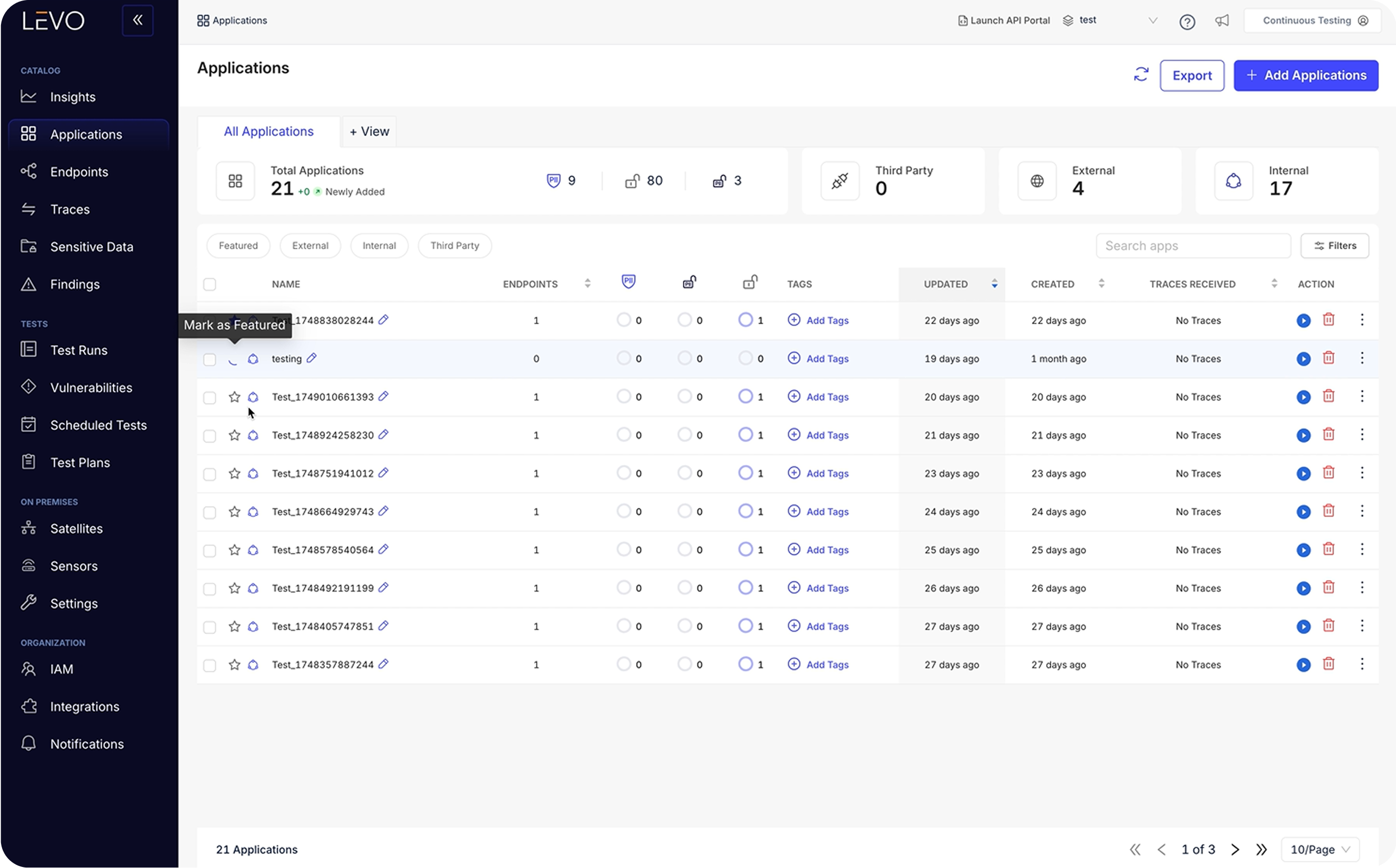

Levo discovers third-party AI usage across providers and AI-enabled SaaS to establish a real baseline before enforcement begins. It ties usage to identity and business context such as team, function, application, and environment. AI activity becomes governable, not just observed. Leadership gets clarity that enables faster, safer scale decisions.

Standardize approved AI with segmented enablement

Levo enforces approved tools and destinations while enabling segmented policy by business unit and region. This avoids blanket restrictions that kill adoption and permissive access that creates avoidable exposure. Governance becomes practical and repeatable, not a series of one-off exceptions. Teams get consistent access, and leadership gets consistent control.

Prevent sensitive data egress without slowing work

Levo inspects outbound prompts and attachments and applies policy actions so sensitive data does not leave as AI context. Governance is applied centrally, so coverage does not depend on slow device rollouts or inconsistent user behavior. Security teams reduce leakage risk without becoming the productivity bottleneck. Adoption scales because the guardrails do not break workflows.

Replace fragmented keys with enforceable access control

Levo centralizes provider credentials so teams do not need unmanaged accounts and scattered tokens. Access becomes standardized, auditable, and enforceable by design. This reduces shadow spend and removes a common failure mode in third-party AI usage. Procurement and security gain control without slowing daily usage.

Keep spend predictable as usage scales

AI usage expands quickly, and costs expand with it. Levo applies quotas and usage controls to prevent runaway consumption and to make spend measurable and attributable across teams. This protects ROI while enabling leadership to expand adoption with confidence. The business keeps the productivity upside without budget volatility.

The value of governed third-party AI spans every enterprise team

Adopt AI-assisted coding and workflow acceleration at scale without constant security escalations and rollbacks. Governance is applied centrally, so teams keep shipping speed while avoiding last-minute rework.

Reduce shadow AI sprawl, prevent sensitive data leakage, and enforce approved tools with customizable policies that reflect real business risk. Control scales with adoption instead of slowing it down.

Turn AI approvals into a repeatable process with auditable evidence and policies aligned to regional requirements. Sensitive data controls can match jurisdictional boundaries without blocking adoption.

Enable third-party AI at scale without trading away control

Frequently Asked Questions

Got questions? Go through the FAQs or get in touch with our team!

What is an AI gateway in enterprise AI security?

An AI gateway is a governance layer that sits between employees or internal systems and third-party AI tools. It provides centralized control over which tools can be used, what data can be sent, and what policies must be enforced. In practice, it turns AI usage from an unmanaged behavior into an operational program with clear accountability. This matters because third-party AI adoption spreads faster than traditional security teams can review or approve it manually. A strong gateway also creates visibility into usage patterns so policies can be tuned based on real behavior rather than assumptions. Levo’s AI Gateway delivers this governance with centralized visibility, enforceable controls, and audit-ready evidence built in.

Why do enterprises need to govern third-party AI?

Third-party AI is now embedded in everyday work, which means usage scales before any centralized program can keep up. Without governance, risk becomes structural: sensitive context leaves the enterprise, tool usage becomes unaccountable, and policies become optional in practice. Governance turns adoption into an operating model that can scale, because it makes usage visible, enforceable, and provable across teams and regions. It also prevents the two common failure outcomes: blanket bans that kill productivity, or permissive adoption that leads to incidents. Levo’s AI Gateway enables governed adoption so productivity compounds while security and compliance stay controlled and defensible.

What is shadow AI, and why is it risky for businesses?

Shadow AI is employee or team usage of AI tools that are not approved, monitored, or governed. It creates blind spots, inconsistent controls, and a high risk of sensitive data being shared externally. It also fragments spend and accountability, making it difficult to understand how AI is being used and where the largest risks sit. Shadow AI grows quickly because the path of least resistance is often outside formal procurement and security processes. When leadership cannot measure usage, it cannot govern it, and that is why bans often appear attractive but fail in practice. Levo’s AI Gateway surfaces shadow usage and converts it into governed usage with identity-aware, enforceable controls.

How do companies prevent employees from sharing sensitive data with AI tools?

Prevention requires enforcement at the point where prompts and attachments leave the enterprise, not just policy training. Effective approaches inspect outbound data, apply redaction or blocking rules, and maintain logs for audit and incident response. Without enforcement, leakage becomes inevitable under time pressure because employees will share context to get better outputs. Good governance also needs policy segmentation, since different teams and regions have different regulatory constraints. The goal is not to stop AI usage, but to keep AI useful while preventing confidential context from leaving the boundary. Levo’s AI Gateway applies AI-aware outbound controls and policy enforcement centrally, so sensitive data stays protected without disrupting workflows.
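To make the inspect, redact, or block flow concrete, here is a minimal Python sketch. It is illustrative only and is not Levo's implementation: the pattern table `PATTERNS`, the `BLOCK_ON` policy set, the `Decision` type, and the `inspect_prompt` function are all hypothetical names, and the two regular expressions are deliberately simplistic stand-ins for real detectors.

```python
import re
from dataclasses import dataclass, field

# Hypothetical detection rules; a real deployment would use far richer detectors.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}
# Assumed policy: these finding types block the request; all others are redacted.
BLOCK_ON = {"us_ssn"}


@dataclass
class Decision:
    action: str                                   # "allow", "redact", or "block"
    prompt: str                                   # text that may be forwarded (empty if blocked)
    findings: list = field(default_factory=list)  # finding types, usable as an audit record


def inspect_prompt(prompt: str) -> Decision:
    """Inspect an outbound prompt and decide what may leave the boundary."""
    findings = [name for name, rx in PATTERNS.items() if rx.search(prompt)]
    if any(name in BLOCK_ON for name in findings):
        return Decision("block", "", findings)
    redacted = prompt
    for name in findings:
        redacted = PATTERNS[name].sub(f"[REDACTED:{name}]", redacted)
    return Decision("redact" if findings else "allow", redacted, findings)
```

Under these assumptions, a prompt containing an email address is forwarded with the address replaced by a placeholder, while a prompt containing an SSN-shaped string is not forwarded at all. The `findings` list is the kind of record that could feed audit logs and incident response.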

How can organizations enforce approved AI tools and providers?

Enforcement requires a centralized layer that can allow approved destinations and block unapproved tools across AI chat and AI-enabled SaaS. Without that layer, “approved AI” remains a guideline rather than a control, and adoption naturally fragments. Strong enforcement also needs identity and context so policies reflect real business risk and do not become overly restrictive. Many organizations also need credential governance so usage does not depend on unmanaged accounts and scattered tokens. When provider governance is enforceable, security and procurement stop being the bottleneck for productivity. Levo’s AI Gateway enforces approved providers and destinations while supporting segmented policies that reflect enterprise risk realities.
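A destination allowlist with identity context can be sketched in a few lines of Python. This is a simplified illustration, not a description of any product's API: the `APPROVED_HOSTS` table, the business unit names, the `.example` hostnames, and the `is_destination_allowed` function are all assumptions made for the example.

```python
from urllib.parse import urlparse

# Hypothetical allowlist: destination hosts approved per business unit.
APPROVED_HOSTS = {
    "engineering": {"api.approved-llm.example", "code-assist.example"},
    "support": {"api.approved-llm.example"},
}


def is_destination_allowed(business_unit: str, url: str) -> bool:
    """Allow a request only if its host is approved for the caller's business unit."""
    host = (urlparse(url).hostname or "").lower()
    # Unknown business units have no approved destinations (default deny).
    return host in APPROVED_HOSTS.get(business_unit, set())
```

The default-deny choice for unknown business units is one possible design; it keeps "approved AI" a control rather than a guideline, at the cost of requiring every unit to be onboarded explicitly.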

What compliance risks come with third-party AI usage?

Key risks include uncontrolled sharing of regulated data, inability to prove controls, and inconsistent enforcement across teams and regions. Auditors typically ask for evidence that policies exist and that they are enforced consistently, not just written down. Without auditability, approvals stall, and the organization either slows adoption or accepts silent exposure. Compliance challenges also grow when AI is used across multiple tools, accounts, and SaaS workflows because evidence becomes scattered and incomplete. The safest programs make governance measurable, repeatable, and demonstrable across the organization. Levo’s AI Gateway produces audit-ready evidence and enforces policy so compliance teams can approve adoption with confidence.

How do enterprises manage the cost of third-party AI at scale?

Costs rise quickly when usage expands across teams and tools without guardrails. Strong cost governance includes usage controls, quotas, and attribution by team or function so spend maps to business value. Without this, AI budgets expand faster than measurable outcomes, which triggers leadership caution and slowdowns. Cost also becomes harder to manage when teams subscribe independently or use unmanaged accounts that bypass procurement visibility. The goal is to keep adoption broad while making spend predictable and defensible. Levo’s AI Gateway adds quotas, usage controls, and attribution so third-party AI spend stays measurable and controlled as adoption grows.
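As a rough illustration of quotas with per-team attribution, the Python sketch below tracks token consumption against a monthly limit. The `QuotaTracker` class, its method names, and the idea of metering in tokens are assumptions chosen for the example, not a documented interface.

```python
class QuotaTracker:
    """Tracks token usage per team against an assumed monthly limit."""

    def __init__(self, monthly_token_limits: dict[str, int]):
        self.limits = monthly_token_limits
        self.used: dict[str, int] = {}

    def try_consume(self, team: str, tokens: int) -> bool:
        """Record usage and return True, or return False if the quota would be exceeded."""
        limit = self.limits.get(team, 0)  # teams without a configured limit get no budget
        current = self.used.get(team, 0)
        if current + tokens > limit:
            return False
        self.used[team] = current + tokens
        return True

    def usage_report(self) -> dict[str, int]:
        """Return consumed tokens by team, for spend attribution."""
        return dict(self.used)
```

A caller would check `try_consume` before forwarding a request and use `usage_report` to map spend to teams. Resetting counters at the start of each billing period is left out of this sketch.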

What are the risks of deploying an AI gateway?

Many AI gateways introduce workflow latency or vendor security concerns. If enforcement relies on remote callouts, or if prompts and responses are exported for analysis, trust drops and bypass grows. Another risk is operational friction, where deployment requires slow endpoint rollouts or heavy application changes, which delays adoption and weakens coverage. A successful gateway needs predictable performance, centralized control, and a rollout model that builds confidence before enforcement becomes strict. The right design keeps governance inside the enterprise boundary, so security posture improves without introducing new vendor risk. Levo’s AI Gateway is designed for predictable performance and keeps sensitive AI context within the customer environment, reducing both latency concerns and third-party exposure.

Do AI gateways protect against unsafe or inaccurate AI outputs?

Governance is incomplete if it only controls what goes out. Enterprises also need safeguards for what comes back because outputs can quickly enter documents, tickets, customer communications, and operational workflows. Without controls, errors can be propagated at scale, creating reputational and operational risk. Strong governance inspects responses, applies policy actions, and creates traceability so organizations can understand what was generated and why. This reduces the risk of “silent contamination” of business workflows with untrusted output. Levo’s AI Gateway supports response controls and traceability so AI outputs remain safe to use across enterprise workflows.

Can AI governance apply differently across teams and regions?

Enterprise risk is not uniform, and policy must reflect business context. Regulated functions often require tighter controls than low-risk use cases, and jurisdictions frequently impose different privacy and data handling requirements. If governance is one-size-fits-all, it either blocks too much and slows adoption or allows too much and increases exposure. Segmented enforcement is what makes adoption scalable because it enables AI where it is appropriate and constrains it where it must be constrained. This is also how governance remains defensible in audits across regions and business units. Levo’s AI Gateway enables segmented policies by team, context, and region so governance aligns to real enterprise operating requirements.
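One simple way to model segmented policy is a lookup table where the most specific match wins. The Python sketch below is hypothetical throughout: the `POLICIES` table, the team and region names, the action labels, and the `resolve_policy` function are illustrative assumptions, and the precedence order is one reasonable choice among several.

```python
# Hypothetical policy table keyed by (team, region); "*" acts as a wildcard.
POLICIES = {
    ("*", "*"): "redact",            # organization-wide default
    ("finance", "*"): "block",       # regulated function, tighter everywhere
    ("*", "eu"): "redact_strict",    # regional requirement
    ("marketing", "us"): "allow",    # low-risk use case
}


def resolve_policy(team: str, region: str) -> str:
    """Return the action for a team and region, preferring the most specific rule."""
    # Assumed precedence: exact pair, then team-wide, then region-wide, then default.
    for key in ((team, region), (team, "*"), ("*", region), ("*", "*")):
        if key in POLICIES:
            return POLICIES[key]
    raise LookupError("no default policy configured")
```

With this table, finance is blocked in every region, marketing is unrestricted in the US but falls under the stricter regional rule in the EU, and any team not listed gets the default.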

What is the difference between an AI gateway and an AI firewall?

An AI gateway governs third-party AI usage when employees or systems call external tools and models. An AI firewall protects in-house AI applications end to end when an enterprise exposes AI features to users and customers. As adoption expands, both boundaries matter because third-party usage creates data egress and policy risk, while in-house AI creates runtime workflow and action risk. Many organizations start with a gateway to govern employee usage and then extend to firewall protections as they build customer-facing AI. When both are covered, governance becomes end-to-end rather than fragmented. Levo supports both boundaries as part of a unified AI security platform, enabling a consistent governance story as AI expands.

How can enterprises get started with AI gateways?

The most reliable path starts with visibility and baseline usage without disrupting workflows, then moves into phased enforcement. This reduces false positives, builds internal trust, and aligns stakeholders early across IT, security, and compliance. Standardizing approved tools and audit evidence from day one prevents governance from becoming a retroactive cleanup project. Enterprises should also define what “safe usage” means by team, data type, and region so governance reflects real operating needs. A structured rollout is what prevents bypass and keeps adoption growing responsibly. Levo’s AI Gateway supports a discover, baseline, enforce rollout model and provides the controls and evidence needed to scale quickly and safely.
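The phased approach can be pictured as a single mode switch that changes what happens on a policy violation. The Python sketch below is one interpretation of a discover, baseline, enforce sequence and is an assumption, not a specification: the `Phase` enum, the `handle_request` function, and the log labels are hypothetical.

```python
from enum import Enum


class Phase(Enum):
    DISCOVER = "discover"  # record usage only, no policy evaluation
    BASELINE = "baseline"  # evaluate policy and log would-be violations, never block
    ENFORCE = "enforce"    # evaluate policy and block violations


def handle_request(phase: Phase, violates_policy: bool, audit_log: list) -> bool:
    """Append an audit entry and return True if the request is forwarded."""
    if phase is Phase.DISCOVER:
        audit_log.append("observed")
        return True
    if violates_policy:
        audit_log.append("would_block" if phase is Phase.BASELINE else "blocked")
        return phase is Phase.BASELINE
    audit_log.append("allowed")
    return True
```

Running in the baseline phase first shows how often a policy would fire before it affects anyone, which is how false positives can be tuned out ahead of strict enforcement.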

Do AI gateways require endpoint agents on employee devices?

Some approaches depend on endpoint agents, which slow rollouts and add operational burden across fleets. That friction often results in uneven coverage, delayed enforcement, and inconsistent governance across business units. Central governance is often preferred because it delivers immediate consistency without waiting for device deployment cycles. A gateway should scale with the organization, not with the number of endpoints managed. The best approach reduces dependency on user behavior and local installs. Levo’s AI Gateway is designed for centralized governance without endpoint agents, enabling faster coverage and faster time-to-control.

Do AI gateways add latency or degrade employee experience?

If a gateway becomes a bottleneck, employees bypass it and governance fails. Latency often appears when enforcement requires external round trips, heavy processing, or unreliable routing that interrupts normal workflows. Strong gateways keep enforcement predictable and efficient at high scale so user experience remains intact. The goal is to preserve the very productivity gains that justify AI adoption. When experience stays smooth, compliance increases naturally because bypass pressure drops. Levo’s AI Gateway is engineered for predictable performance so governance does not become a productivity tax.

How should enterprises evaluate whether an AI Gateway increases third-party risk?

When evaluating AI Gateway vendors, the key question is whether governance reduces enterprise risk or simply relocates it to another vendor. Many solutions require routing prompts, responses, or usage telemetry through external systems, which can introduce new data handling concerns, approval delays, and regulatory complications. A strong AI Gateway should preserve enterprise boundaries, support clear data residency expectations, and make it easy to prove what data is processed where. It should also provide controls that work at scale without creating new blind spots or new trust dependencies. Levo’s AI Gateway keeps governance within the customer environment and avoids exporting sensitive AI context, reducing third-party exposure while still enforcing enterprise policy.

What capabilities matter most when choosing an AI Gateway vendor?

Enterprises should prioritize capabilities that make governance scalable, not manual. The best AI Gateways support segmented policies by team, region, and use case, while keeping those policies consistent and auditable across the organization. They should make enforcement repeatable and adaptable as business needs change, without requiring constant human review for every exception. Evaluation should also consider how quickly controls can be rolled out, how easily they can be tuned, and whether the system produces audit-ready evidence by default. Levo’s AI Gateway supports customizable guardrails with centralized control so policies can evolve quickly without turning governance into operational overhead.
