AI Security for Insurance

%20(1).png)

%20(1).png)

%20(1).png)

The Levo AI Security Platform

for Insurance

Runtime AI Visibility

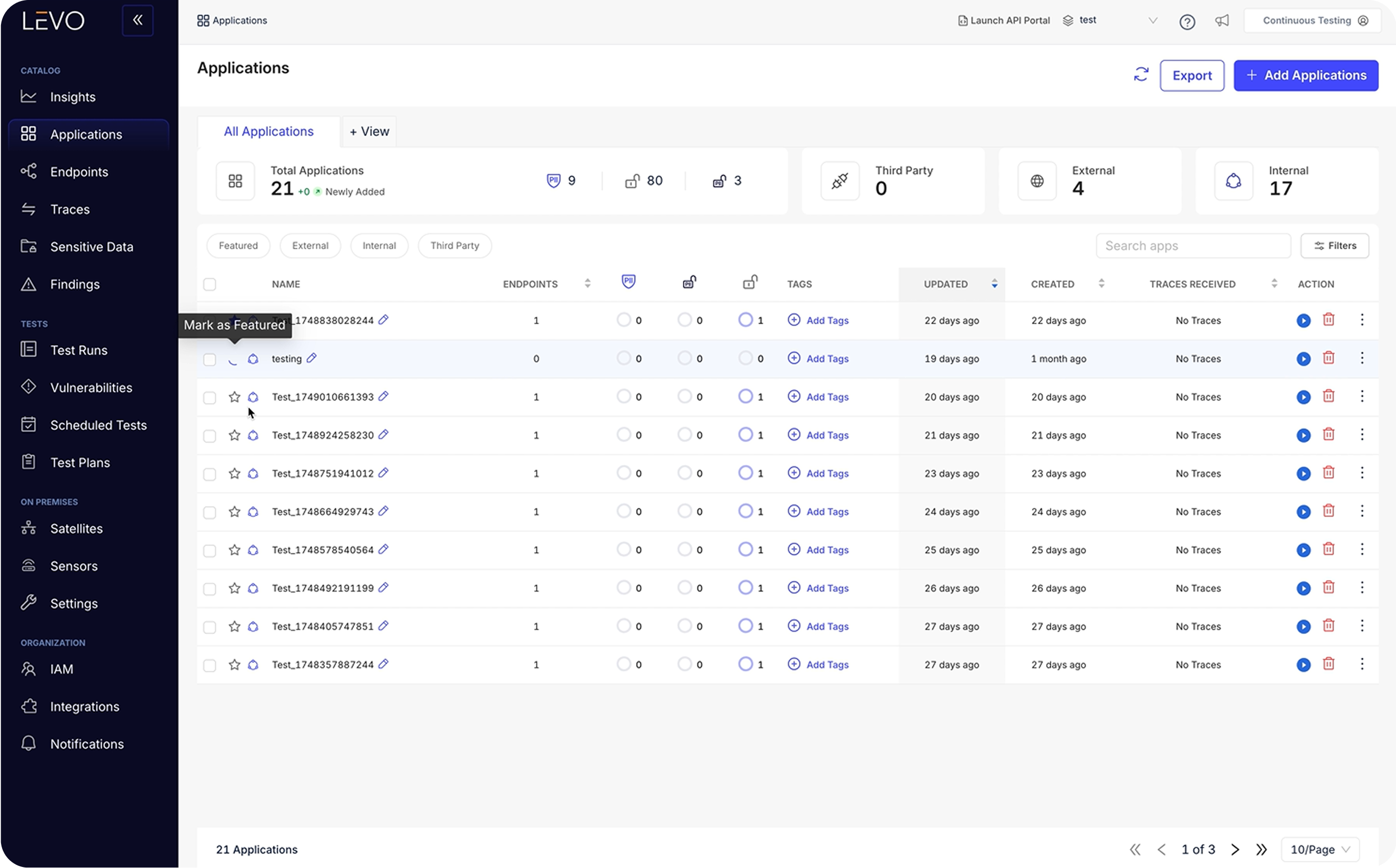

Gain comprehensive visibility across underwriting algorithms, claims automation pipelines, fraud analytics and customer‑facing AI. Discover every model, prompt, agent and vector store processing policyholder data, and see who is accessing what, when and why.

AI Monitoring & Governance

Continuously monitor sensitive data flows, model drift and fairness across your insurance AI estate. Define policy‑as‑code for PII/PHI handling and NAIC‑aligned rules, and produce audit‑ready evidence for regulators and internal risk committees.

AI Threat Detection

Detect prompt injection, data poisoning, unauthorized API calls and anomalous behaviors in real time. Receive instant alerts when AI chatbots hallucinate policy coverage, when fraud models behave unexpectedly or when employees use shadow AI.

AI Threat Protection

Enforce inline guardrails to redact PII/PHI, mask sensitive claims data and block malicious prompts before they reach your models. Prevent unauthorized changes to underwriting decisions or claims adjudication by isolating model contexts.

.png)

AI Red Teaming

Stress‑test your insurance AI systems before deployment. Simulate adversarial prompts, poisoned loss‑run documents and synthetic fraud claims to identify vulnerabilities. Apply learnings to strengthen defenses and satisfy regulatory requirements.

.png)

Built for the teams driving AI transformation across insurance.

Launch AI‑powered underwriting, claims and fraud initiatives faster without building custom security plumbing. Levo’s plug‑and‑play platform abstracts away data masking, prompt filtering and audit logging so your teams can focus on innovation.

Gain visibility across all AI models, agents and third‑party vendors. Define and enforce guardrails to stop data leaks and prompt injection, and investigate incidents with full audit trails, all while meeting headcount and budget constraints.

Prove fairness, privacy and compliance to regulators and auditors. Levo delivers policy‑as‑code governance, evidence of model lineage and runtime monitoring aligned with NAIC, HIPAA, GDPR and state guidelines, reducing regulatory risk and audit fatigue.

Scale in Insurance AI, without risking trust or compliance.

Frequently Asked Questions

Got questions? Go through the FAQs or get in touch with our team!

What is AI Security for Insurance?

AI Security for insurance refers to the practices and technologies that protect generative and analytical AI systems, used in underwriting, claims, fraud detection and customer service, from unique risks such as data leakage, prompt injection, hallucinations, model poisoning and shadow AI. Unlike traditional cybersecurity, which defends deterministic systems, AI security must monitor model behavior, prompts and outputs in real time and enforce data‑handling policies to safeguard sensitive PII/PHI.

Why do underwriting, claims and fraud AI need security?

AI dramatically accelerates underwriting accuracy, streamlines claims processing and strengthens fraud detection. However, these systems process sensitive policyholder data and make high‑impact decisions; misconfigurations or attacks can expose personal information, hallucinate non‑existent policy clauses or let adversaries manipulate outcomes. Securing the AI layer ensures you reap the efficiency gains without incurring customer harm or regulatory backlash.

What specific risks do insurers face with generative AI?

Key risks include:

Data leaks: Prompts or model outputs inadvertently exposing policyholder PII/PHI

Hallucinations and bias: LLMs fabricating reasons for claim denials or producing discriminatory pricing

Prompt injection and adversarial inputs: attackers hiding malicious instructions in claims documents or emails, causing data exfiltration or unauthorized policy changes

Model leakage, poisoning and drift: stealing model weights, corrupting training data or letting performance degrade over time

Shadow AI and vendor risk: unsanctioned use of third‑party AI tools or insecure vendors that bypass corporate controlsWhy aren’t traditional cybersecurity tools enough?

Classic insurance cybersecurity tools (WAFs, DLP, IAM) protect networks and endpoints but can’t inspect dynamic prompts, vector embeddings or model outputs. Generative and agentic AI introduce a novel attack surface: prompts act as code, models retain training data, and outputs can hallucinate sensitive information. AI security platforms provide runtime visibility, prompt filtering, data masking and model‑behavior monitoring that legacy tools lack.

How does AI security help with compliance and regulation?

Regulators such as the NAIC, New York Department of Financial Services and state privacy laws require insurers to safeguard sensitive data, ensure model fairness and maintain detailed audit trails. AI security platforms enforce policies (e.g., no PII in training data), monitor model decisions for bias, record every AI action and align with standards like HIPAA, GDPR and CCPA. This reduces the risk of penalties, litigation and reputational damage.

What’s the ROI of investing in AI Security for insurers?

AI itself cuts costs and boosts revenue by improving fraud detection, speeding claims and enhancing underwriting accuracy. AI security protects those gains: it prevents data breaches and regulatory fines, reduces false positives/negatives in fraud models, preserves customer trust and accelerates AI approvals. Securing AI allows insurers to innovate faster while maintaining a competitive loss ratio and meeting compliance obligations.

What is the difference between general insurance cybersecurity and AI Security for Insurance?

Traditional insurance cybersecurity protects networks, applications and data, but cannot interpret prompts, model outputs, embeddings or AI-driven decisions.

AI Security for Insurance adds model-aware controls that detect prompt injection, stop policyholder data leakage, validate AI-generated decisions and secure underwriting, claims and fraud models in real time.Why do underwriting and pricing models need AI-specific security controls?

Underwriting AI processes extremely sensitive PII/PHI, medical histories, financial data, telematics inputs and third-party risk signals. Without AI-layer security, errors, bias, hallucinated explanations or adversarial manipulation can lead to unfair pricing, regulatory reviews and higher loss ratios.

How can insurers prevent AI from leaking policyholder data or PHI?

Insurers need runtime monitoring, prompt/output filtering, redaction and policy-as-code controls that block PII/PHI from entering prompts, embeddings or responses. Levo’s AI Threat Protection enforces these controls automatically across claims AI, underwriting models and customer-facing LLMs.

How does AI security help insurers stay compliant with NAIC, state insurance regulations and privacy laws?

AI security platforms generate the audit trails, model-level visibility, access logs and decision explanations regulators increasingly expect. Insurers use Levo to demonstrate responsible AI use, enforce guardrails and prevent non-compliant data handling across underwriting, claims, fraud and support workflows.

What AI threats are fraud analytics teams facing today?

Fraud rings increasingly use adversarial inputs, deepfake documents, synthetic IDs and prompt injection to bypass AI-based fraud models. Levo detects abnormal behavior, poisoned signals, suspicious document patterns and model drift that could weaken fraud detection accuracy over time.

How can insurers secure LLM-based customer service bots and claims assistants?

Customer-facing LLMs are vulnerable to prompt injection, misinformation, hallucinated policy guidance and data exposure. Levo applies real-time monitoring and guardrails to ensure AI agents provide accurate, compliant and safe coverage advice across every interaction.

When should insurers introduce AI Security during the AI lifecycle?

AI security should be implemented before models go into production, during experimentation, tuning and integration phases. However, Levo can also secure systems already in production by providing visibility, enforcement and red-teaming without rebuilding your stack.

What is the ROI of investing in AI Security for insurance carriers?

AI security reduces regulatory risk, prevents costly data-leak incidents, lowers fraud losses and accelerates approval for new AI initiatives. Insurers with Levo ship AI faster, reduce manual oversight costs and maintain strong policyholder trust as they scale automation.

How does AI Security help with third-party InsurTech and vendor risk?

Many carriers rely on external AI vendors for OCR, fraud scoring, claims automation, or chatbots, but lack visibility into what these systems actually do. Levo provides centralized monitoring, access governance and policy enforcement across third-party AI tools, reducing supply chain exposure.

What steps should insurers take to securely scale AI across underwriting, claims and fraud?

Begin with model inventory and visibility, set policies for PII/PHI handling, implement runtime guardrails, continuously monitor for drift and conduct ongoing AI red-teaming. Levo consolidates all of these requirements into one platform so insurers can scale AI responsibly without slowing innovation.

How is sensitive data protected?

Gateways and firewalls see prompts and outputs at the edge. Levo sees the runtime mesh inside the enterprise, including agent to agent, agent to MCP, and MCP to API chains where real risk lives.

How is this different from model firewalls or gateways?

Live health and cost views by model and agent, latency and error rates, spend tracking, and detections for loops, retries, and runaway tasks to prevent outages and control costs.

What operational insights do we get?

Live health and cost views by model and agent, latency and error rates, spend tracking, and detections for loops, retries, and runaway tasks to prevent outages and control costs.

Does Levo find shadow AI?

Yes. Levo surfaces unsanctioned agents, LLM calls, and third-party AI services, making blind adoption impossible to miss.

Which environments are supported?

Levo covers LLMs, MCP servers, agents, AI apps, and LLM apps across hybrid and multi cloud footprints.

What is Capability and Destination Mapping?

Levo catalogs agent tools, exposed schemas, and data destinations, translating opaque agent behavior into governable workflows and early warnings for risky data paths.

How does this help each team?

Engineering ships without added toil, Security replaces blind spots with full runtime traces and policy enforcement points, Compliance gets continuous evidence that controls work in production.

How does Runtime AI Visibility relate to the rest of Levo?

Visibility is the foundation. You can add AI Monitoring and Governance, AI Threat Detection, AI Attack Protection, and AI Red Teaming to enforce policies and continuously test with runtime truth.

Will this integrate with our existing stack?

Yes. Levo is designed to complement existing IAM, SIEM, data security, and cloud tooling, filling the runtime gaps those tools cannot see.

What problems does this prevent in practice?

Prompt and tool injection, over permissioned agents, PHI or PII leaks in prompts and embeddings, region or vendor violations, and cascades from unsafe chained actions.

How does this unlock faster AI adoption?

Levo provides the visibility, attribution, and audit grade evidence boards and regulators require, so CISOs can green light production and the business can scale AI with confidence.

What is the core value in one line?

Unlock AI ROI with rapid, secure rollouts in production, powered by runtime visibility across your entire AI control plane.

Show more

.svg)