TL;DR

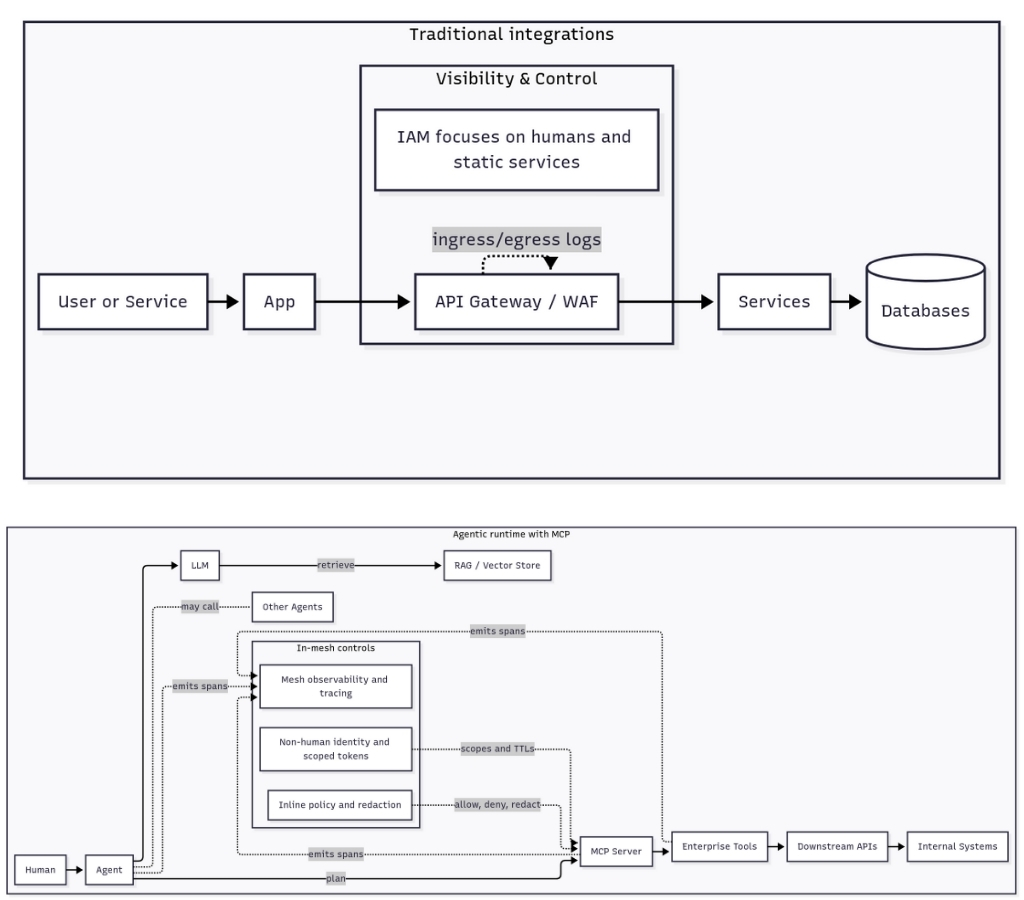

Traditional integrations assumed deterministic code and edge visibility. Agents changed that. The Model Context Protocol (MCP) exposes tools and data to agents as callable resources that can be orchestrated by natural-language plans. An MCP server is the runtime broker that turns an agent’s intent into concrete tool calls and downstream API actions. This delivers speed and power, and it also creates a new security surface inside the mesh that legacy controls cannot see.

What is the traditional technology

Before agents, teams integrated systems with a stack like this:

- REST and gRPC APIs - rigid inputs, deterministic outputs.

- API gateways and service meshes - edge routing, rate limits, auth headers, TLS, basic transformations.

- SDKs, webhooks, ETL, RPA - predefined verbs and scripts that move or mutate data in predictable ways.

Traditional security assumptions:

- IAM expects humans and static services, not non-human actors that plan and act.

- Testing expects deterministic code paths, not black-box reasoning or chained tool use.

- Visibility lives at ingress or egress, mid-flow is largely invisible.

- Data security centers on storage and static DLP, not prompts, embeddings, or generated outputs.

This model worked because applications were procedural and interactions were short, structured, and predictable.

Why the need for MCP

Software shifted from “APIs as dumb pipes” to agents and toolchains that accept goals in natural language, then plan across many tools. Three changes created the need for MCP:

- Higher-order abstraction

Before: supply record IDs and params.

After: state intent like “tag all BFSI customers” and let the system decide which records and which calls.

Power rises, and so do risks of misclassification, prompt trickery, and unintended mass updates.

- Chained interactions

Before: user → app → API, done.

After: human → agent → agent → MCP → downstream APIs, sometimes recursively. Long, opaque chains appear inside the runtime.

- Natural language interfaces

Before: structured payloads acted like guardrails.

After: prompts and tool descriptions become the interface, so exploitation becomes semantic and social, not just code-based.

Enter MCP: a standard way to expose tools and data to agents so they can act with context and consistency, instead of every team inventing an ad-hoc plugin system.

What is MCP technology

MCP is the technology layer that bridges language and action:

- It exposes tools and data as callable resources that agents can discover and invoke.

- It unifies tools and prompts behind APIs, so agents can plan multi-step work without brittle glue code.

- It operates inside the AI runtime alongside the LLM, embeddings, and RAG components. In practice the flow looks like:

trigger → agent → LLM → RAG or embeddings → MCP tools → downstream APIs → outputs.

Remove any link in that chain and agentic workflows stall. Remove APIs and the whole architecture collapses. MCP is the connective tissue that lets agents turn goals into real system changes.

What is an MCP server

An MCP server is a concrete implementation of that technology. It runs as a broker that:

- Publishes a catalog of tools and data an agent can use.

- Accepts the agent’s intent and parameters and turns them into specific tool calls.

- Chains those calls across downstream APIs, vector stores, and internal systems.

- Returns results and intermediate state back to the agent as the plan executes.

Think of an MCP server as the runtime foreman for agent work. The agent sets the goal, the MCP server figures out which tools to use and how to sequence them.
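To make the broker role concrete, here is a minimal, stdlib-only sketch of a catalog plus dispatcher. It handles two JSON-RPC 2.0 methods that MCP defines, `tools/list` and `tools/call`, and nothing else: the initialize handshake, transports, and input schemas are left out, and the result shape is simplified. The `add_note` tool, the `CATALOG` registry, and the `handle` function are hypothetical names chosen for illustration, not part of any SDK.

```python
import json
from typing import Any, Callable, Dict, List

# Hypothetical in-memory state and catalog (tool name -> description + handler).
NOTES: List[str] = []
CATALOG: Dict[str, Dict[str, Any]] = {}


def tool(name: str, description: str) -> Callable:
    """Register a function in the catalog under the given tool name."""
    def register(fn: Callable) -> Callable:
        CATALOG[name] = {"description": description, "handler": fn}
        return fn
    return register


@tool("add_note", "Store a short note and return its id.")
def add_note(text: str) -> dict:
    NOTES.append(text)
    return {"id": len(NOTES) - 1}


def _error(req_id: Any, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})


def handle(raw: str) -> str:
    """Dispatch one JSON-RPC 2.0 request string and return the response string."""
    req = json.loads(raw)
    req_id, method = req.get("id"), req.get("method")
    params = req.get("params", {})
    if method == "tools/list":
        # Publish the catalog so an agent can discover what it may call.
        result = {"tools": [{"name": n, "description": t["description"]}
                            for n, t in CATALOG.items()]}
    elif method == "tools/call":
        entry = CATALOG.get(params.get("name"))
        if entry is None:
            return _error(req_id, -32602, "unknown tool")  # invalid params
        # Simplified: a real server wraps tool output in a content list.
        result = entry["handler"](**params.get("arguments", {}))
    else:
        return _error(req_id, -32601, "method not found")
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})
```

Keeping the catalog as data, rather than hard-coding dispatch, is what lets one server expose the same tools to many agents.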

MCP server vs traditional technologies

Advantages of MCP servers

- Speed to capability - expose a tool once, make it usable by many agents and apps without custom glue.

- Higher-order automation - let agents compose multi-step workflows that would be brittle in scripts.

- Unified tool catalog - a consistent way to discover, describe, and invoke enterprise tools and data.

- Context-aware execution - plans can combine RAG context, embeddings, and prior steps to choose the right action.

- Platform leverage - centralize governance, quality, and safety for tool use instead of duplicating per app.

Disadvantages and risks to plan for

- Privilege sprawl at machine speed - a broadly badged agent can perform wide actions very quickly.

- Opaque call graphs - long chains across agents, MCPs, and APIs are hard to reconstruct without mesh-level tracing.

- Semantic attack surface - natural-language plans amplify prompt injection and tool misuse.

- Data leakage in motion - sensitive data moves through prompts, embeddings, RAG, and tool outputs, not just storage.

- Edge blind spots - gateways and SDKs at the perimeter cannot see mid-stream agent ↔ MCP ↔ API flows.

- Audit gaps - boards and regulators will ask who acted, on whose behalf, with which decision and data. You need signed, immutable traces.

What “good” looks like for MCP and agents

Use a five-part controls model

- Visibility - build an AI bill of materials for apps, agents, MCP servers, LLMs, vector stores, and external APIs. Auto-discover shadow assets and ownership.

- Monitoring - observe the runtime mesh. Reconstruct agent ↔ agent, agent ↔ MCP, and MCP ↔ API traces with correct attribution to non-human identities.

- Detection - classify PHI and PII in prompts, embeddings, RAG, and tool outputs. Detect over-scoped authorities and policy violations in real time.

- Blocking - enforce inline guardrails in the mesh. Redact sensitive fields, allow or deny risky actions, and kill sessions when drift or injection is detected.

- Testing - shift left with continuous, exploit-aware tests that simulate prompt injection, collusion, and plugin misuse. Tie tests to live runtime truths.

Governance should move from ticket-time approvals to call-time decisions. Scope privileges by tool and purpose, time-box sessions, and produce signed evidence by default.
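A call-time decision of this kind can be sketched in a few lines. The example below assumes a hypothetical `Grant` record that ties a non-human identity to one tool, one purpose, and an expiry time; the field names and the `authorize` helper are illustrative, not a reference to any specific policy engine.

```python
import time
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Grant:
    actor_id: str      # non-human identity, for example an agent
    tool: str          # the single tool this grant covers
    purpose: str       # the declared purpose the grant was issued for
    expires_at: float  # epoch seconds; the session is time-boxed


def authorize(grants: Iterable[Grant], actor_id: str, tool: str,
              purpose: str, now: Optional[float] = None) -> bool:
    """Deny by default; allow only an unexpired grant that matches
    actor, tool, and purpose exactly."""
    now = time.time() if now is None else now
    return any(
        g.actor_id == actor_id
        and g.tool == tool
        and g.purpose == purpose
        and now < g.expires_at
        for g in grants
    )
```

Passing `now` explicitly keeps the check deterministic in tests; in production the default clock applies.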

Hands-on, set up an MCP server

Choose an SDK

- Python: FastMCP, a small framework for Python servers.

- TypeScript or Node: official @modelcontextprotocol/sdk with stdio or HTTP transports.

Scaffold a minimal server

Option A, Python, “Notes” server

Install:

server.py

Run:

Option B, Node, “CRM tags” server

Init:

server.mjs

Run:

Register your server with a host, quick configs

MCP is configured per host or client, not per base model. The hosts below are common examples.

Claude Desktop

Create or edit claude_desktop_config.json

- macOS: ~/Library/Application Support/Claude/claude_desktop_config.json

- Windows: %APPDATA%\Claude\claude_desktop_config.json
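As a rough illustration of what goes in that file, the sketch below builds a single `mcpServers` entry and prints it as JSON. The server name `notes` and the script path are placeholders, and the `claude_desktop_entry` helper is hypothetical; it rejects relative paths because the host launches the command outside your shell's working directory.

```python
import json
from pathlib import PurePosixPath, PureWindowsPath


def claude_desktop_entry(name: str, command: str, script_path: str) -> dict:
    """Build a config dict with one stdio server entry under mcpServers."""
    is_abs = (PurePosixPath(script_path).is_absolute()
              or PureWindowsPath(script_path).is_absolute())
    if not is_abs:
        raise ValueError("use an absolute path to the server script")
    return {"mcpServers": {name: {"command": command, "args": [script_path]}}}


# Placeholder values; substitute your own server name and path.
config = claude_desktop_entry("notes", "python", "/absolute/path/to/server.py")
print(json.dumps(config, indent=2))
```

Merge the printed entry into any existing `mcpServers` block rather than overwriting the file.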

Restart Claude Desktop and approve the tools. Use absolute paths. Check the Developer settings and logs if the server does not appear.

VS Code with GitHub Copilot Chat, Agent mode

User-wide or workspace config supports MCP, including dev containers. Follow the Copilot MCP docs and add your servers, or configure inside devcontainer.json for portable workspaces.

Continue (VS Code and JetBrains)

YAML example:

Continue supports YAML and JSON, and a simple mcpServers block. (Continue)

Cursor

Cursor documents native MCP support and stdio servers. Register via their documented flows or extension API.

Cline

Open Advanced MCP settings and add entries to cline_mcp_settings.json for each local server.

Smoke test

Ask your host to list tools, then try:

Security blueprint you can apply

Auth and authorization

- OAuth 2.0 or similar with scoped tokens per agent and per MCP, short TTLs, least privilege per tool, explicit impersonation rules for delegation.

- PKCE for public clients, Dynamic Client Registration for bring-your-own agents.

- Signed, append-only audit logs for every tool call, include requestor identity and scope.
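One way to approximate a signed, append-only log is an HMAC hash chain, where each entry's signature covers the previous entry's signature. The sketch below is an assumption about how such a log could be built, not a description of any product; `append_entry` and `verify` are hypothetical helpers, and a real deployment would keep the key in a vault and likely use asymmetric signatures.

```python
import hashlib
import hmac
import json
from typing import Dict, List


def _sign(key: bytes, body: Dict[str, str]) -> str:
    payload = json.dumps(body, sort_keys=True).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def append_entry(log: List[dict], key: bytes, actor_id: str,
                 tool: str, scope: str) -> dict:
    """Append one tool-call record, chained to the previous signature."""
    prev = log[-1]["sig"] if log else ""
    body = {"actor_id": actor_id, "tool": tool, "scope": scope, "prev": prev}
    entry = {**body, "sig": _sign(key, body)}
    log.append(entry)
    return entry


def verify(log: List[dict], key: bytes) -> bool:
    """Return True only if every signature and every chain link is intact."""
    prev = ""
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "sig"}
        if body.get("prev") != prev:
            return False  # an entry was removed or reordered
        if not hmac.compare_digest(_sign(key, body), entry["sig"]):
            return False  # an entry was modified
        prev = entry["sig"]
    return True
```

Because each signature depends on the one before it, editing or deleting an earlier record invalidates verification from that point on.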

Policy

- Purpose-scoped roles per tool, time-boxed sessions, just-in-time elevation with approvals, deny by default for tool chains that cross regulated boundaries.

Data

- Secrets in a vault or env, no secrets in configs, redact prompts and tool outputs in transit, field-level masking on export.
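Redaction in transit can start as simply as pattern substitution on prompts and tool outputs. The sketch below is deliberately naive: the two regular expressions (email addresses and US-style SSNs) are illustrative assumptions, and production classifiers need far broader coverage. The returned count could feed an attribute such as a per-call PII counter.

```python
import re
from typing import Tuple

# Illustrative patterns only; real PII detection needs many more classes.
PATTERNS = (
    ("EMAIL", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
)


def redact(text: str) -> Tuple[str, int]:
    """Replace each match with a [LABEL] placeholder and count replacements."""
    total = 0
    for label, pattern in PATTERNS:
        text, n = pattern.subn(f"[{label}]", text)
        total += n
    return text, total
```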

Change

- Version tool contracts, require pull requests for new tools or expanded scopes, run drift checks on catalogs.

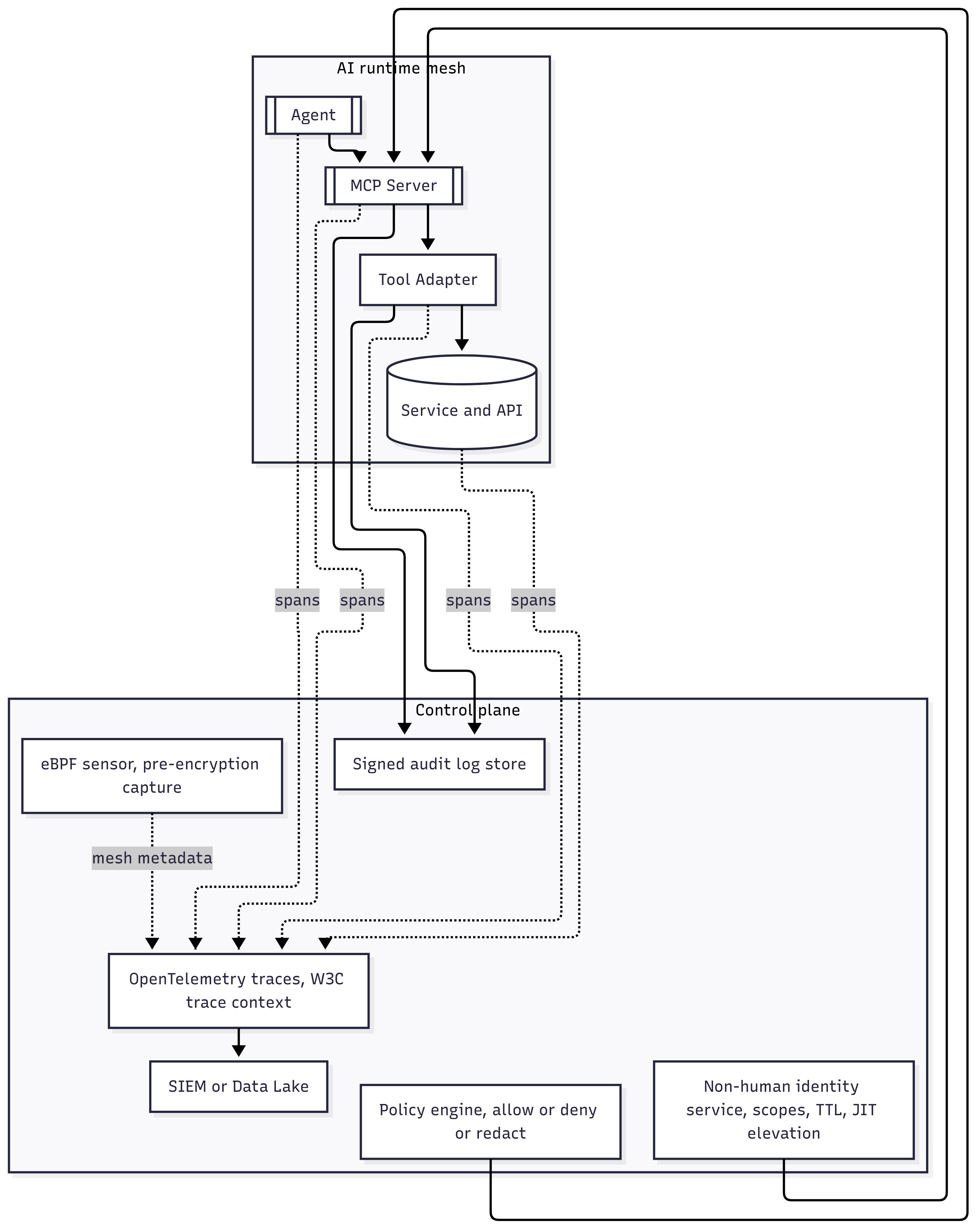

Observability pattern that proves control

Instrument mesh-level traces, not only edges.

- OpenTelemetry spans for agent → MCP → downstream API, carry W3C trace context, add attributes like actor.id and actor.type for non-humans, tool.name, scope.grants, pii.count.

- Export to your SIEM or lake with a fixed retention policy, attach signed evidence blobs for audits.

- For host troubleshooting, use Claude Desktop’s config and logs, and Copilot’s MCP settings.
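The trace-context part of this pattern can be illustrated without any tracing library. The sketch below builds W3C `traceparent` values by hand (version `00`, a 32-hex trace id, a 16-hex parent span id, and 2-hex flags) and shows a child hop keeping the trace id while taking a new span id. The `span_record` helper and its dict layout are hypothetical; in practice an OpenTelemetry SDK would create and export the spans.

```python
import re
import secrets

TRACEPARENT = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent() -> str:
    """Start a new trace at the agent, with the sampled flag set."""
    return f"00-{secrets.token_hex(16)}-{secrets.token_hex(8)}-01"


def child_traceparent(parent: str) -> str:
    """Keep the trace id and flags, mint a new span id for the next hop."""
    match = TRACEPARENT.match(parent)
    if match is None:
        raise ValueError("malformed traceparent")
    trace_id, _parent_span_id, flags = match.groups()
    return f"00-{trace_id}-{secrets.token_hex(8)}-{flags}"


def span_record(traceparent: str, actor_id: str, actor_type: str,
                tool_name: str) -> dict:
    """Hypothetical span payload carrying the non-human identity attributes."""
    return {
        "traceparent": traceparent,
        "attributes": {
            "actor.id": actor_id,
            "actor.type": actor_type,
            "tool.name": tool_name,
        },
    }
```

Passing the `traceparent` value along each agent → MCP → API hop is what lets a backend stitch the full call graph back together.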

SRE checklist for production

- Concurrency caps per agent and per tool, backpressure, circuit breakers.

- Timeouts and retries with exponential backoff, idempotency keys.

- Rate limits by identity and scope, budgeted tool execution in time and tokens.

- Health checks, structured logs, crash-only process runners.

- Cost guards for high-frequency tools and LLM sampling zones.
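Two items from this checklist, retries with exponential backoff and idempotency keys, fit together as in the sketch below. It assumes the downstream call accepts an `idempotency_key` keyword and signals a transient failure with `TimeoutError`; both are illustrative assumptions, as is the `call_with_retries` helper itself.

```python
import random
import time
import uuid
from typing import Any, Callable


def call_with_retries(fn: Callable[..., Any], *, attempts: int = 4,
                      base_delay: float = 0.2, max_delay: float = 5.0,
                      sleep: Callable[[float], None] = time.sleep) -> Any:
    """Retry fn on TimeoutError with capped exponential backoff and jitter.

    The same idempotency key is sent on every attempt so the downstream
    system can deduplicate a call that succeeded but timed out in transit.
    """
    key = str(uuid.uuid4())
    for attempt in range(attempts):
        try:
            return fn(idempotency_key=key)
        except TimeoutError:
            if attempt == attempts - 1:
                raise  # budget exhausted, surface the failure
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))  # full jitter avoids retry storms
```

The `sleep` parameter is injectable so tests can skip real waiting.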

Threat model and controls

Practical payload examples

Tag BFSI customers

Rotate keys

Export DSR report

Broadened comparison table

How Levo can help

- Complete runtime visibility - eBPF sensors at the OS and kernel capture traffic before full encryption, and attribute every call to the right app, agent, or MCP. This is how you trace agent ↔ MCP ↔ API flows that edge tools and SDKs miss.

- Identity-first governance for non-humans - treat agents and MCP servers as first-class identities. Record signed decisions and actions, and manage everything in one control plane.

- Inline guardrails - redact sensitive data in transit, enforce region and vendor policy, prevent unauthorized delegation, and end rogue sessions in real time with low overhead.

- Continuous, AI-aware testing - automate exploit-aware suites based on live context, wire them into CI/CD so every release ships with runtime-informed assurance.

- Privacy-preserving, cost-efficient by design - compute stays local, only scrubbed metadata leaves your environment, and overhead stays minimal so regulated teams can adopt without latency tax.

Related: see the Levo MCP server use case for enterprise security patterns and deployment considerations: Levo MCP server.

Future outlook

- OS-level support is growing. Microsoft announced first-party MCP support in Windows to wire AI apps into core system features while gating risk. Expect similar moves across platforms, with controlled registries and stronger consent flows.

- Standardization and governance will harden. Expect crisper scope semantics, registry signing, provenance for resources, and better conformance tests.

- Enterprise agent platforms will converge on shared meshes, where multiple hosts and models share an MCP layer that carries identity, policy, and evidence by default.

- Security posture will shift from “after the edge” to “inside the mesh,” with in-mesh tracing, inline DLP, and per-tool authorization as table stakes.

- Adoption curve is steep. MCP has momentum as a common port for AI apps, yet the biggest friction remains authentication, privacy, and safe default policies. Plan with guardrails from day one.

Conclusion

Agents will scale enterprise AI, and MCP is how they safely reach tools and data. The benefit is higher-order automation and faster capability, the cost is a new in-mesh surface that legacy controls cannot see. The path forward is clear: inventory your runtime, observe agent ↔ MCP ↔ API flows, scope non-human identities, enforce inline policy, and automate testing. If you do that, you get speed without surprises, and a control plane that proves it.

Related: Levo MCP server for deployment patterns and security controls in the wild.

Examples and directories, to explore safely

- Official protocol and quickstarts - concepts, tools, user quickstart for Claude Desktop, and reference servers. (Model Context Protocol, GitHub)

- Reputable directories - Model Context Protocol reference servers on GitHub, npm filesystems, curated “awesome MCP servers,” and large community directories. Evaluate security posture before use. (GitHub, npm, MCP.so)

- Client docs for hosts

- VS Code and GitHub Copilot Chat MCP docs, including dev container config and Visual Studio flows. (Visual Studio Code, GitHub Docs)

- Continue setup and MCP server blocks. (Continue)

- Cursor MCP reference. (Cursor)

- Cline MCP protocol overview and settings. (Cline)

Tip: Treat third party servers like any code dependency. Pin versions, review scopes, and run in sandboxes.
